The "Inpainting from Text Prompt" tool is a powerful template now available in the AI-FLOW app. This guide will walk you through how this tool can revolutionize your image editing tasks, demonstrate how to use it effectively, and compare it to other methods within the app.

Why Choose the Inpainting from Text Prompt Template?

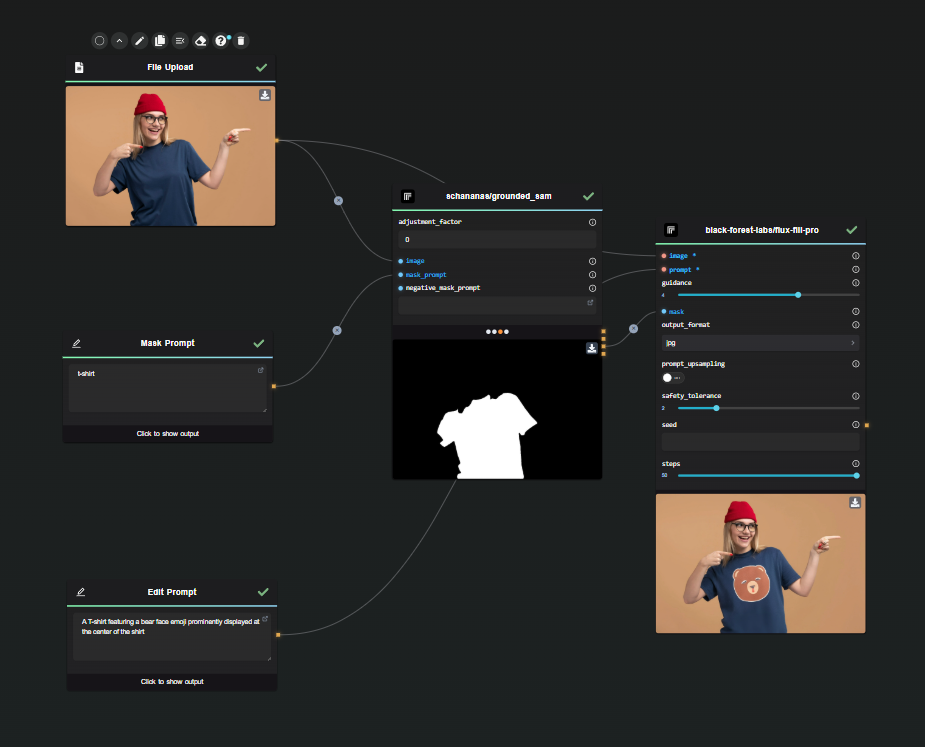

This tool combines the power of Grounded SAM and FLUX Fill Pro to deliver precision and flexibility in text-based image editing. It enables users to modify images seamlessly using simple text prompts, making it an ideal choice for designers, digital artists, marketers, and developers. Whether you're an experienced professional or a beginner, this tool simplifies complex image editing tasks, saving you time and effort.

How It Works

The Inpainting from Text Prompt tool utilizes advanced AI models to achieve high-quality results:

- Grounded SAM: Generates masks from text prompts, which makes it well suited to complex masking tasks. Grounding DINO acts as a zero-shot detector that produces bounding boxes and labels from free-form text, and Segment Anything (SAM) turns those boxes into pixel-level masks.

- FLUX Fill Pro: Enhances these capabilities with state-of-the-art inpainting, ensuring that edits naturally blend with the original image by preserving lighting, perspective, and context.
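
Conceptually, the two models hand off a mask: the first stage decides which pixels may change, and the second generates new content for those pixels. The toy sketch below illustrates that contract on a tiny grayscale grid. It is an idealized view, not the Grounded SAM or FLUX Fill Pro API, and `composite` is a hypothetical helper named only for illustration.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask is 0, take generated pixels where mask is 1."""
    return [
        [g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


# Toy 2x3 grayscale "images"; real images are far larger and have color channels.
original = [[10, 10, 10], [10, 10, 10]]
generated = [[99, 99, 99], [99, 99, 99]]
mask = [[0, 1, 0], [0, 1, 1]]  # 1 marks pixels selected by the text prompt

result = composite(original, generated, mask)
```

Only the masked pixels change, which is why the shape of the mask matters so much for the final result.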

Insights from Practical Use

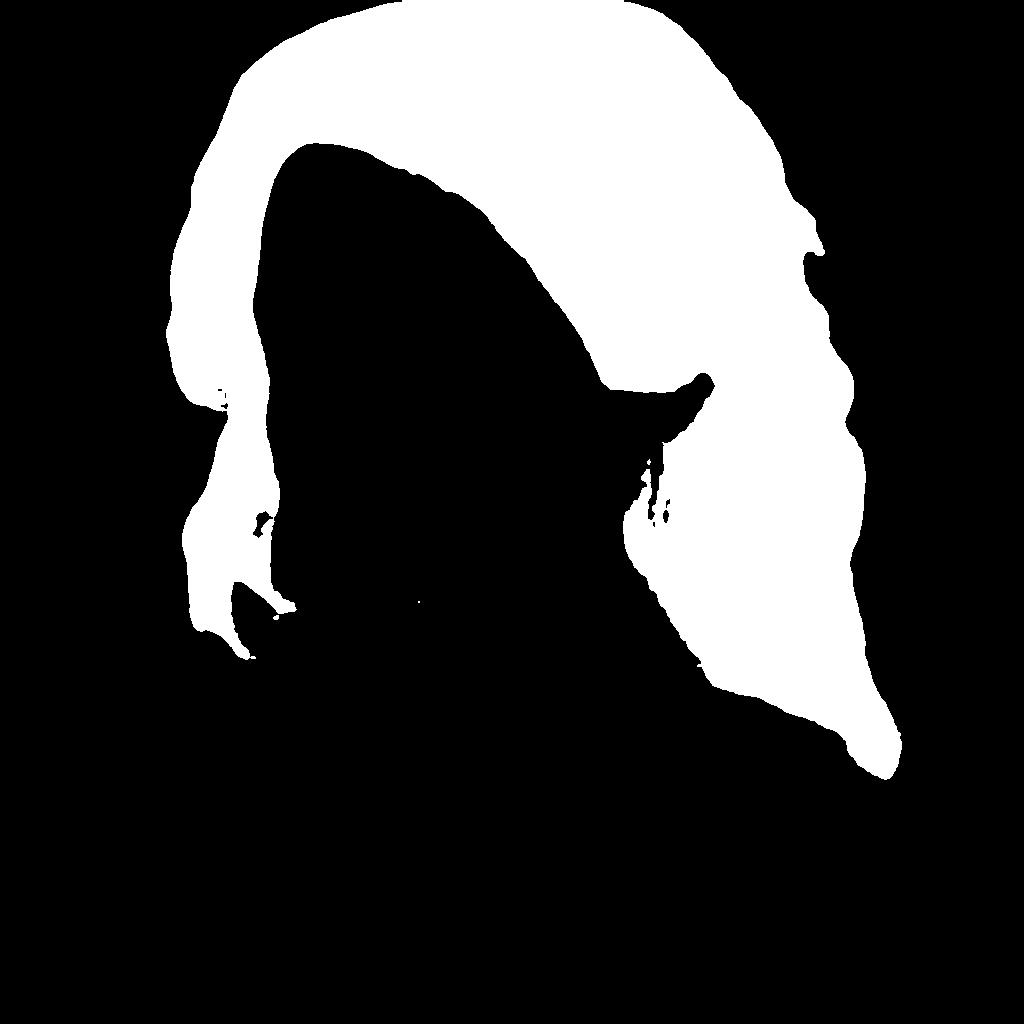

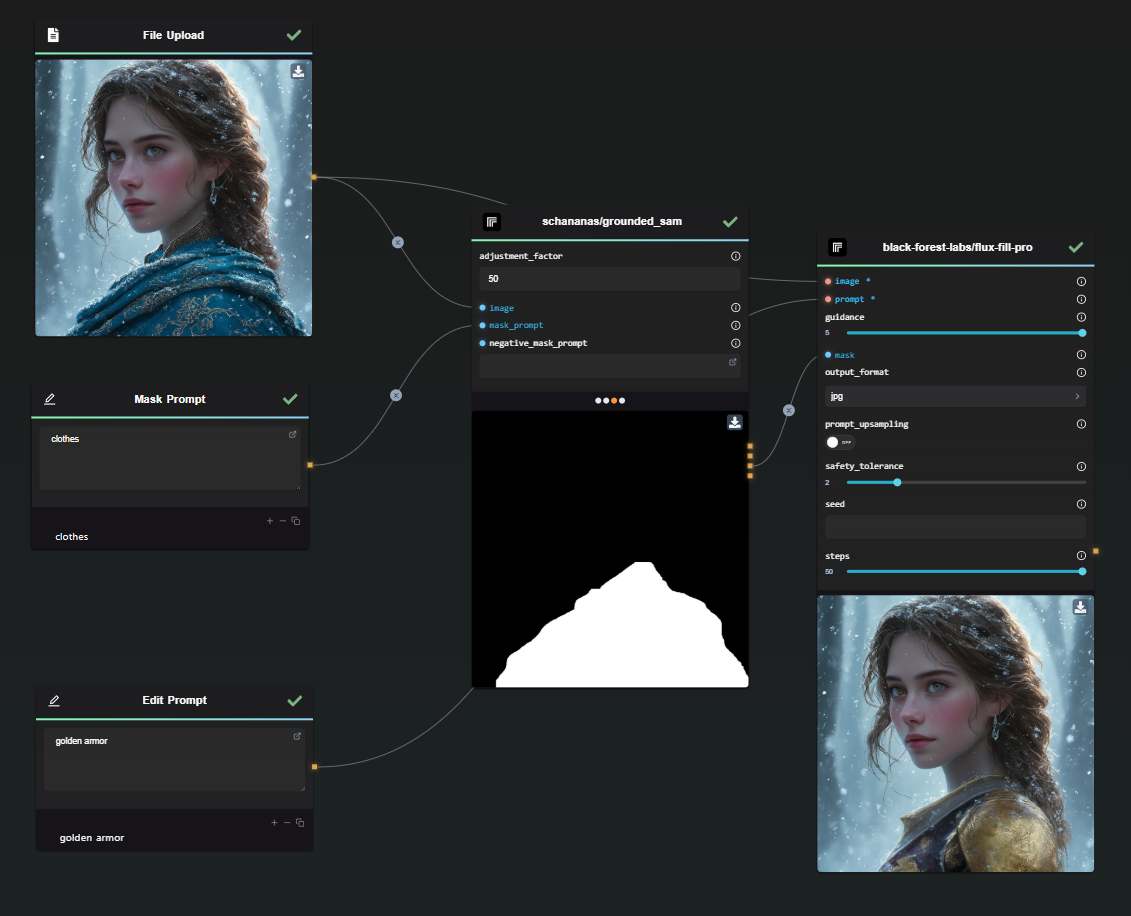

The mask generated by Grounded SAM is highly accurate and identifies key elements effortlessly. However, because the mask follows the object's outline so closely, it limits flexibility when the replacement has a different size or shape. For instance, in the armor example, the tool keeps the original shape, which leaves visible remnants such as the clothing in the background.

To adjust the mask behavior, you can experiment with the "adjustment_factor" parameter of grounded_sam. A positive value will dilate the mask, while a negative value will erode it.
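
To make dilation and erosion concrete, here is a minimal pure-Python sketch on a binary mask. It assumes the factor behaves like a radius in pixels with a square window; how grounded_sam actually interprets "adjustment_factor" is not specified here, and `dilate`, `erode`, and `adjust_mask` are hypothetical helper names.

```python
def dilate(mask, radius):
    """Grow a binary mask: a pixel becomes 1 if any pixel in its square window is 1."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                if any(mask[yy][max(0, x - radius):min(w, x + radius + 1)]):
                    out[y][x] = 1
                    break
    return out


def erode(mask, radius):
    """Shrink a binary mask: dilate the inverted mask, then invert back."""
    inverted = [[1 - v for v in row] for row in mask]
    return [[1 - v for v in row] for row in dilate(inverted, radius)]


def adjust_mask(mask, adjustment_factor):
    """Positive values dilate, negative values erode, zero returns a copy."""
    if adjustment_factor > 0:
        return dilate(mask, adjustment_factor)
    if adjustment_factor < 0:
        return erode(mask, -adjustment_factor)
    return [row[:] for row in mask]


# A single selected pixel in the middle of a 5x5 mask.
mask = [[0] * 5 for _ in range(5)]
mask[2][2] = 1

grown = adjust_mask(mask, 1)     # the pixel expands to a 3x3 block
shrunk = adjust_mask(grown, -1)  # eroding the block recovers the single pixel
```

A larger mask gives the inpainting model more room to redraw the object, at the cost of touching more of the surrounding image.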

Below are two results with an adjustment_factor of 50:

As shown, the larger mask appears to allow the FLUX Fill model to make better use of the available space. The remnants of clothing are no longer visible.

You can use this tool for edits that preserve the original container's shape. An excellent example is retexturing or recoloring an object like this t-shirt:

This programmatic solution is better suited for batch-editing similar files with consistent container shapes rather than intricate individual edits, such as modifying the golden armor.
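
As a rough illustration of the batch-editing idea, the sketch below builds one job description per image while reusing the same prompts and mask setting. The dictionary keys, file names, and prompt text are assumptions made for this example; they are not the AI-FLOW API, and the jobs would still need to be submitted through whatever interface you use.

```python
from pathlib import PurePosixPath


def build_jobs(image_paths, mask_prompt, inpaint_prompt, adjustment_factor=0):
    """Return one hypothetical job dict per image, all sharing the same settings."""
    jobs = []
    for path in image_paths:
        p = PurePosixPath(path)
        jobs.append({
            "image": str(p),
            "mask_prompt": mask_prompt,        # text used to select the region
            "inpaint_prompt": inpaint_prompt,  # text describing the replacement
            "adjustment_factor": adjustment_factor,
            "output": str(p.with_name(p.stem + "_edited" + p.suffix)),
        })
    return jobs


# Example values only: two similar product shots edited with identical settings.
jobs = build_jobs(["shirts/a.png", "shirts/b.png"], "t-shirt", "a red t-shirt")
```

Because every job shares the same prompts, this approach works best when the objects have a consistent shape across files.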

Input and Output

- Inputs: Image files, text prompts for mask generation and inpainting, and adjustable mask parameters. Experiment with the "guidance" and "adjustment_factor" settings to optimize results.

- Outputs: Edited images with seamlessly integrated changes while maintaining the quality of the original photo.
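
One simple way to experiment with these settings is to enumerate a small grid of combinations and compare the outputs side by side. The sketch below only builds the combinations; the numeric values are placeholders, since valid ranges for "guidance" and "adjustment_factor" are not documented here, and `parameter_grid` is a hypothetical helper.

```python
from itertools import product


def parameter_grid(guidance_values, adjustment_factors):
    """Return every combination of the two settings as a list of dicts."""
    return [
        {"guidance": g, "adjustment_factor": a}
        for g, a in product(guidance_values, adjustment_factors)
    ]


# Placeholder values chosen only to show the shape of the grid.
grid = parameter_grid([20, 40], [0, 25, 50])
```

Each entry can then be applied to the same input image so that differences in the results come only from the settings.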

Potential Use Cases

The versatility of the "Inpainting from Text Prompt" tool makes it invaluable in various scenarios:

- Designers: Easily modify product images for e-commerce.

- Marketers: Customize visuals for campaigns while maintaining brand identity.

- Artists: Efficiently create variations of existing artwork, exploring new creative directions.

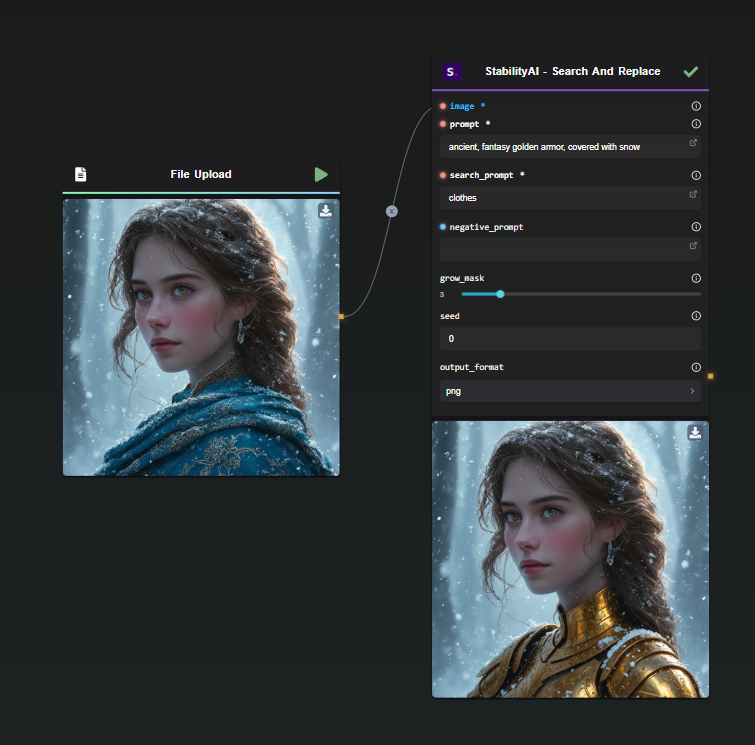

Alternative Methods

The StabilityAI Node provides "Search and Replace" and "Search and Recolor" models, which achieve similar results. While these models lack direct mask verification, they excel in small edits and offer a parameter to "grow" masks. This allows replacing an object with a larger one, though it risks unintended edits.

Here is an example with the armor. The modification integrates less well than with FLUX Fill, although I only used a basic prompt. On the other hand, the shape is great. For each example, I left the "grow" parameter at 3.

And a t-shirt example:

To use this alternative, select the "Search and Replace" template in the app, or use the StabilityAI Node directly.

Ready to Enhance Your Image Editing Experience?

With AI-FLOW, you can integrate this tool into your workflows, automate processes, and build custom AI tools without extensive coding expertise. Explore the potential of the "Inpainting from Text Prompt" tool today by visiting AI-FLOW App. Unleash your creativity and elevate your projects with AI-powered image editing!

Additional Resources

For more detailed information, refer to the following resources: