Product Description with AI: A Comprehensive Guide

Creating compelling product descriptions is essential for any successful online business. Whether you're selling homemade soaps or high-tech gadgets, the right words can help you connect with customers and drive more sales. However, writing engaging, informative, and SEO-optimized product descriptions can be challenging, especially if you're working alone or with a small team. This is where AI-Flow's "Generate Product Description" template comes in: it helps entrepreneurs and marketers streamline the content creation process.

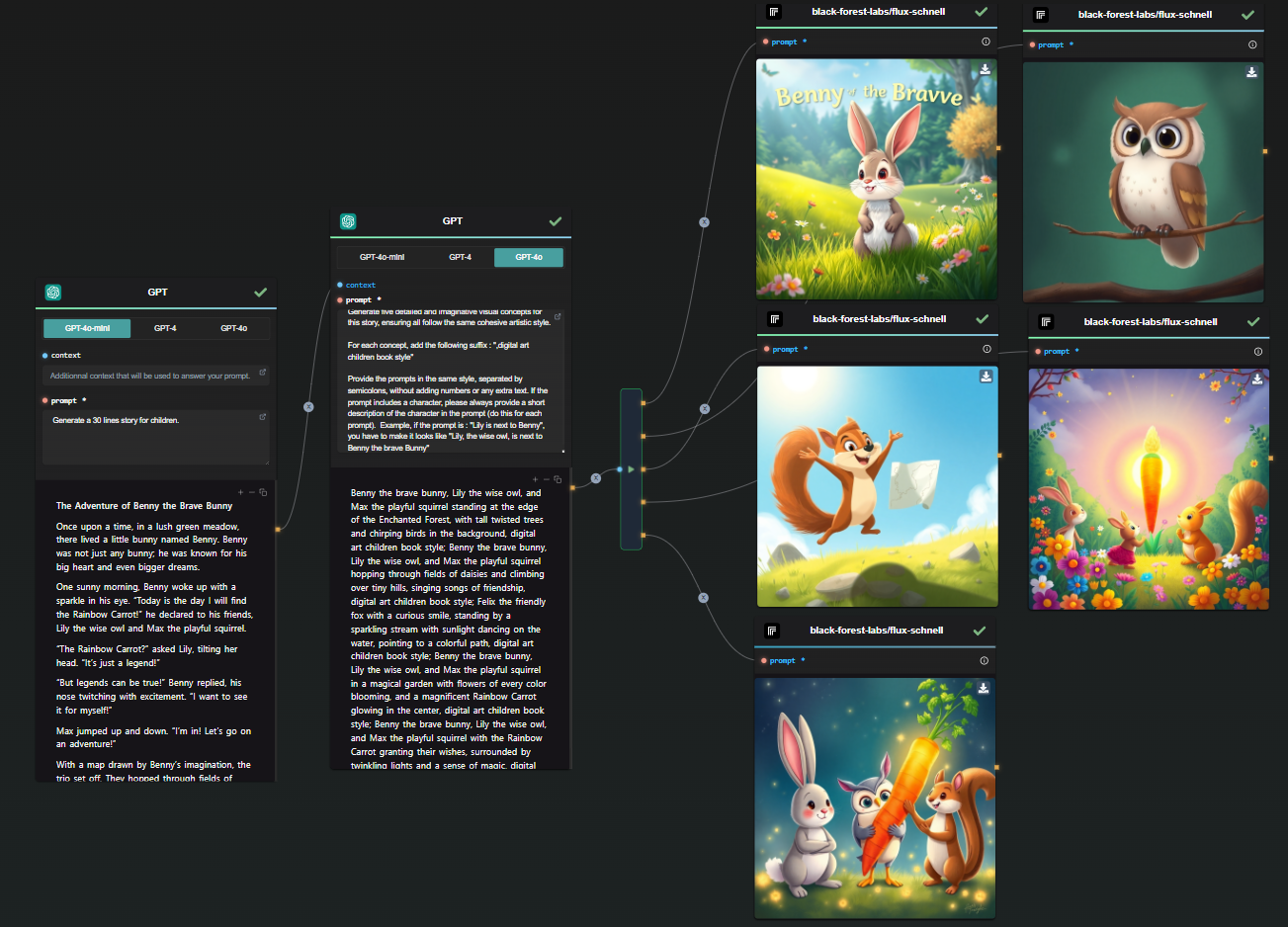

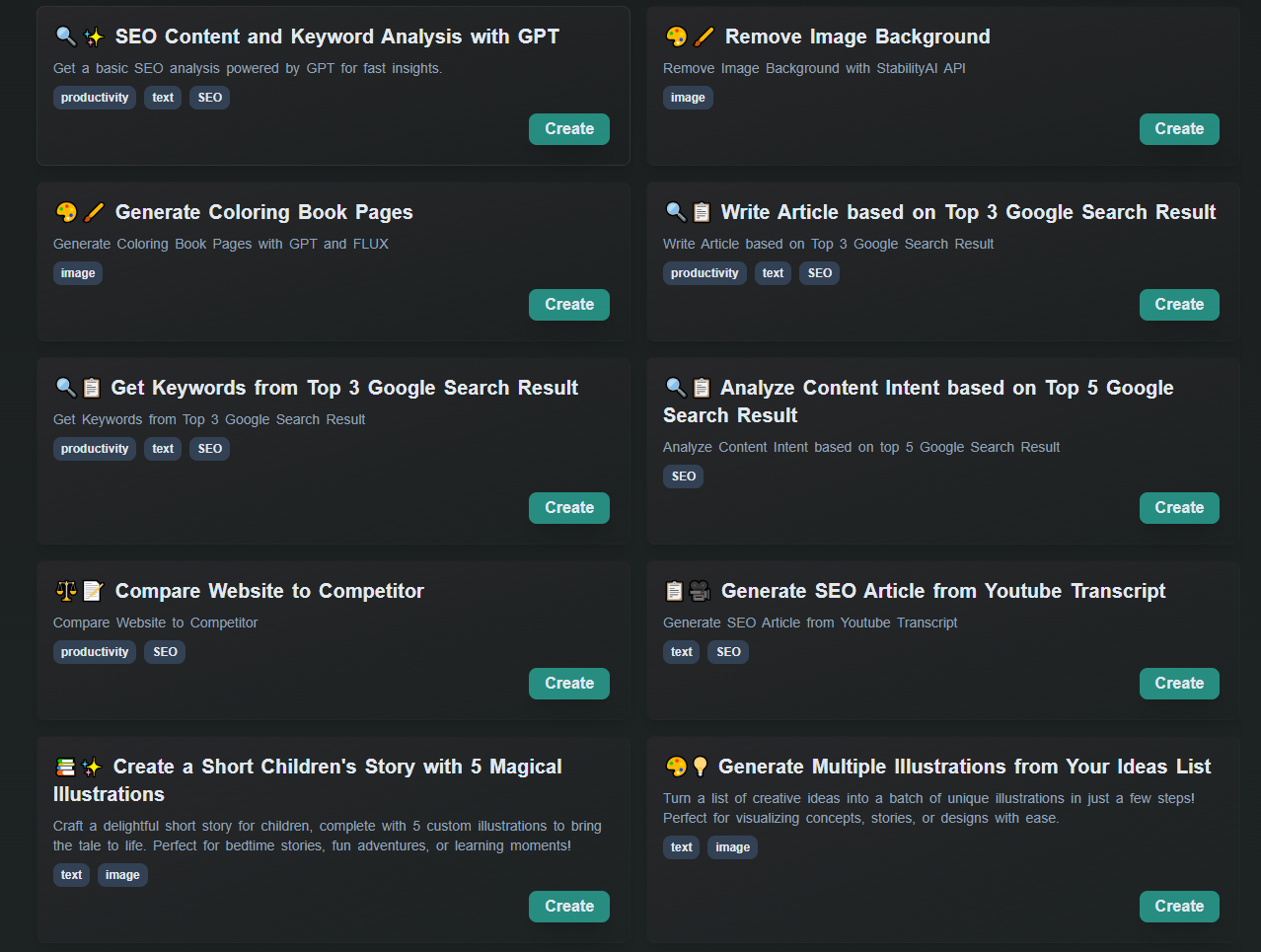

Introduction to the "Generate Product Description" Template

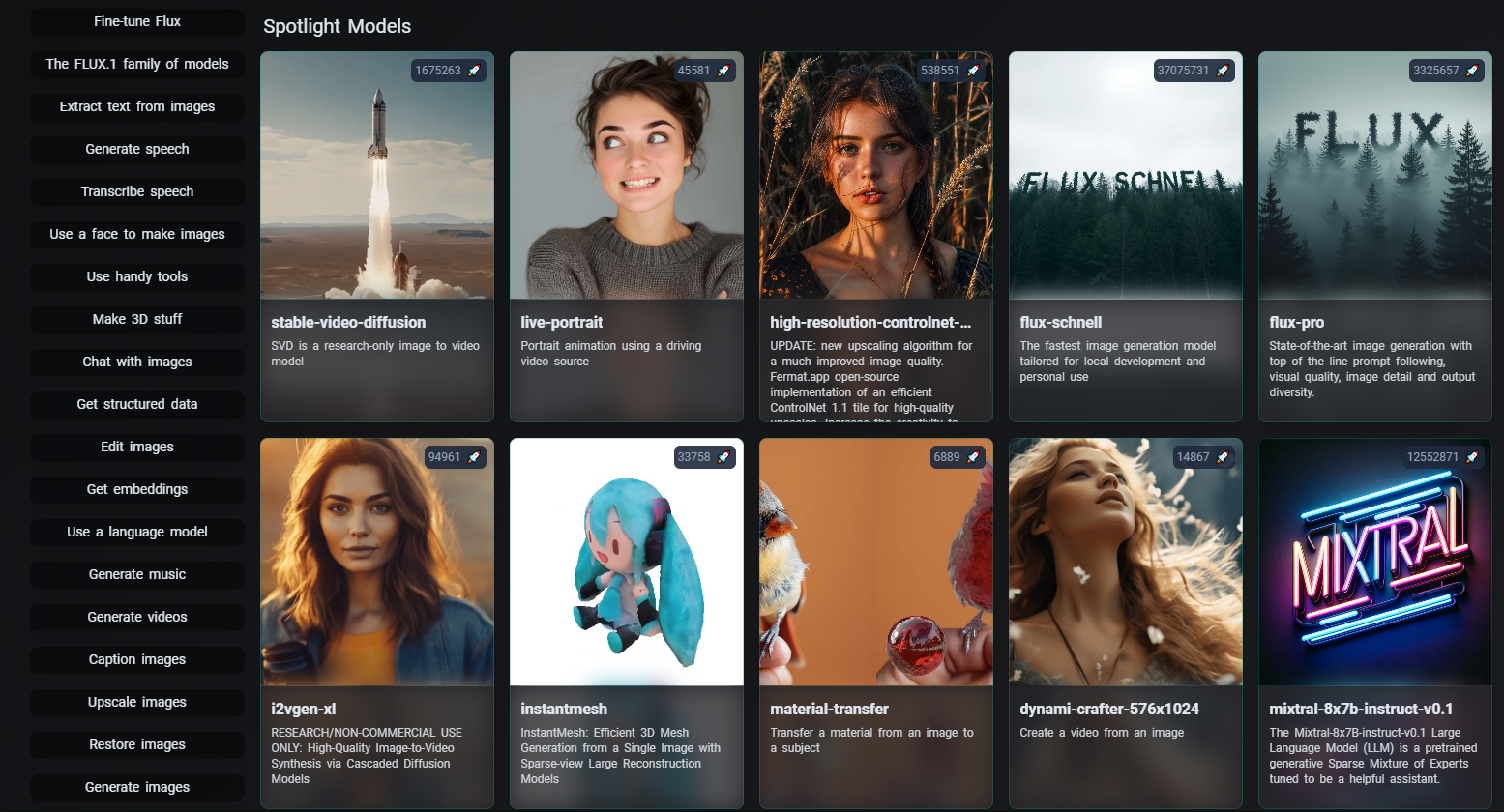

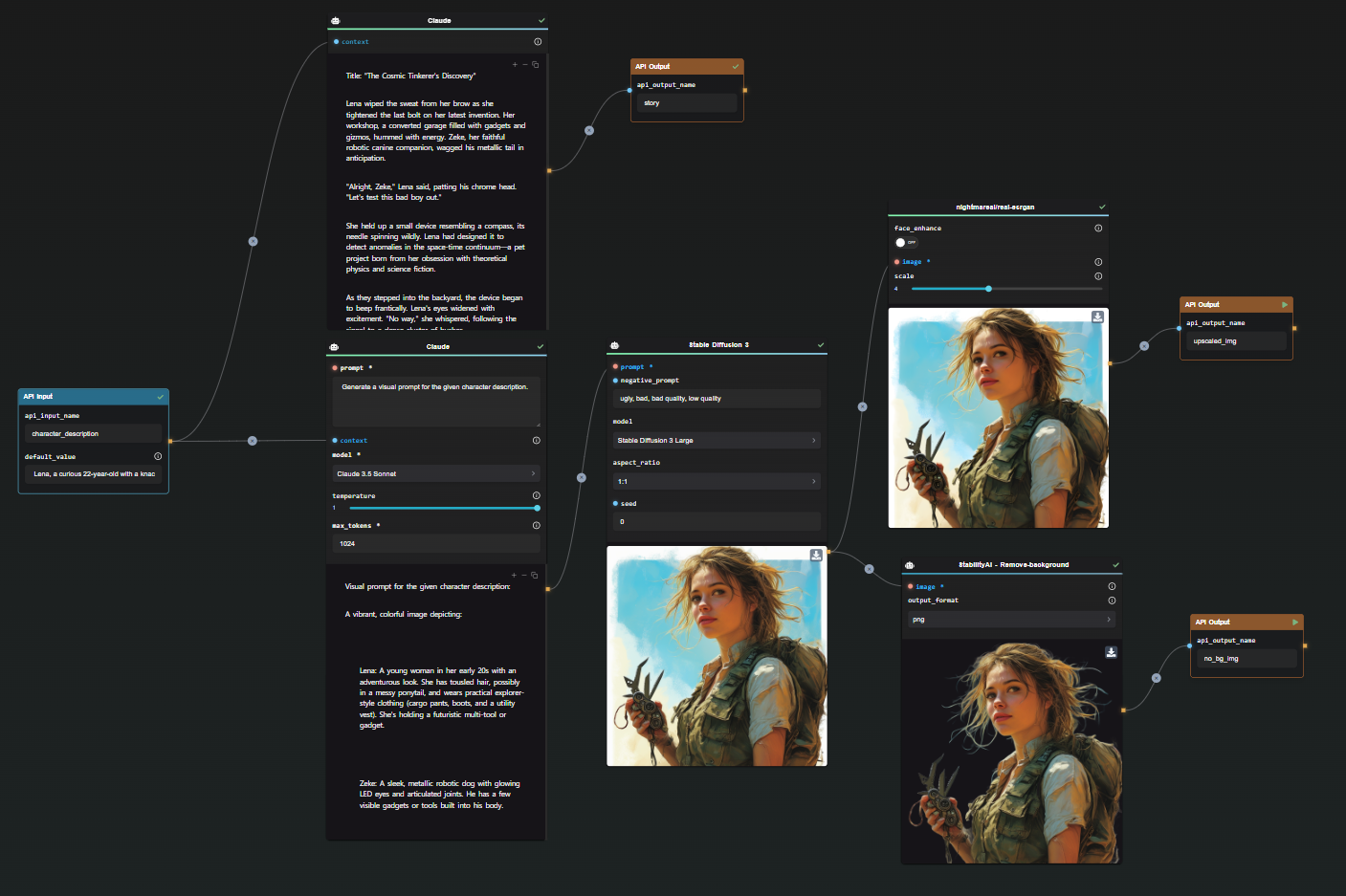

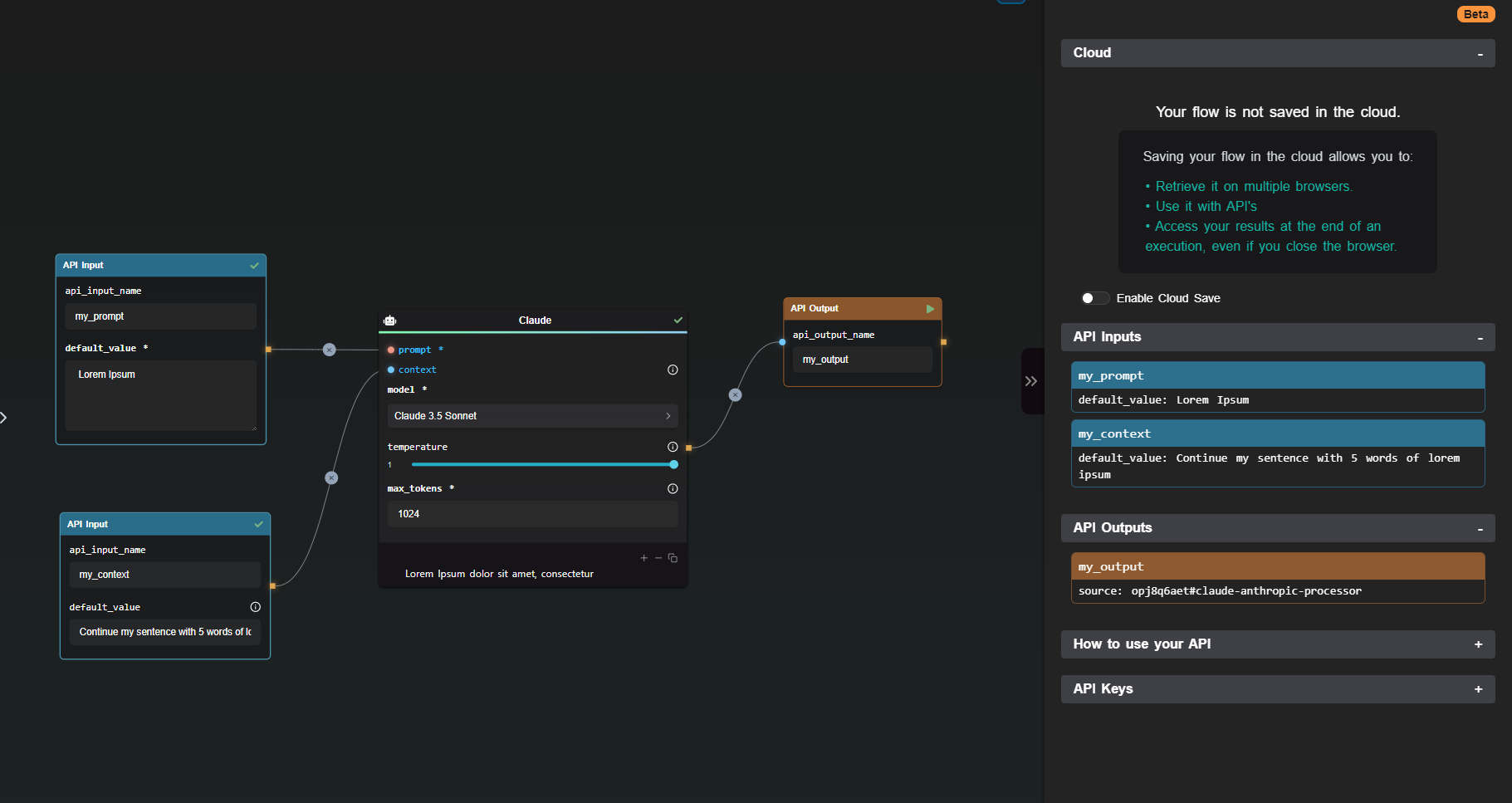

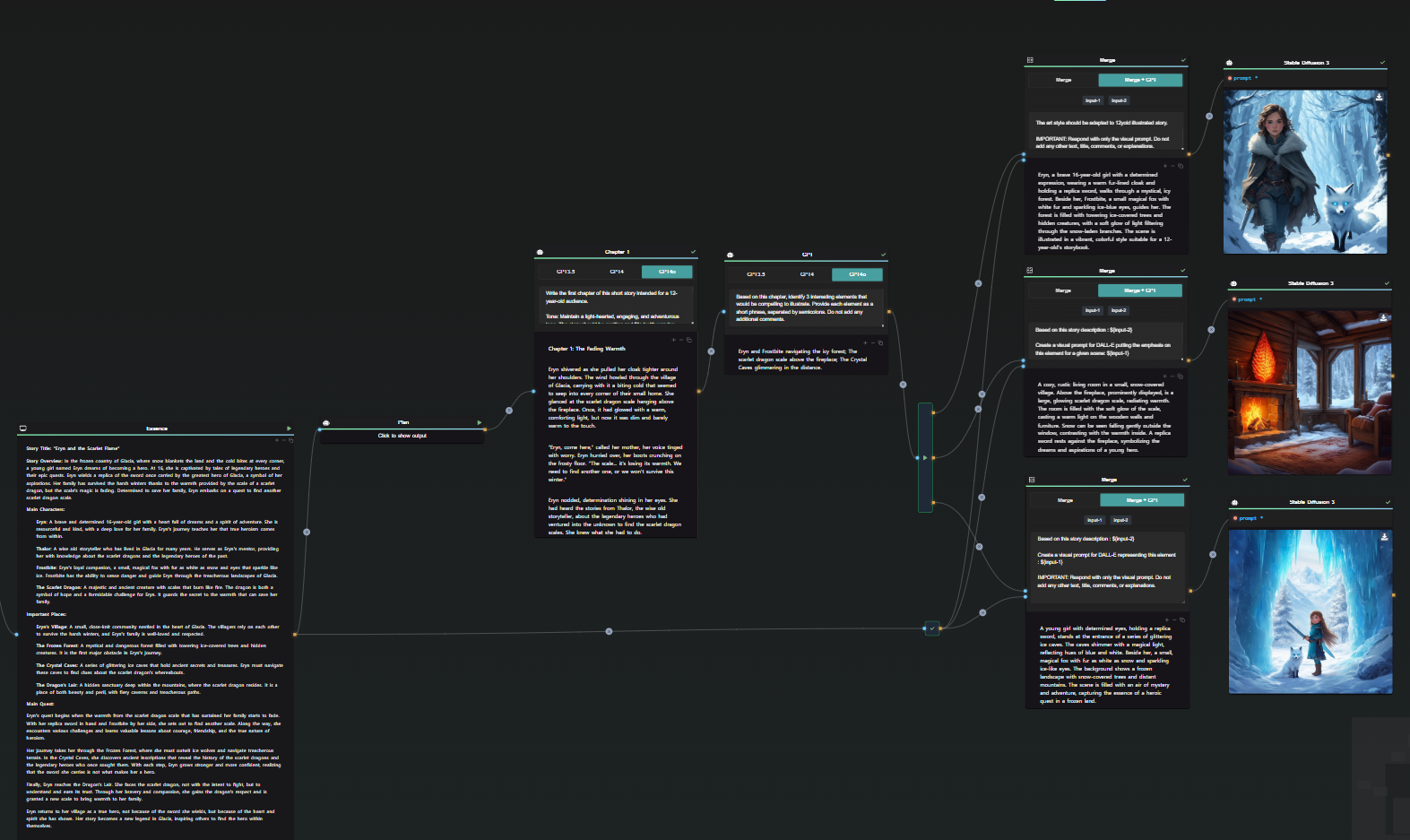

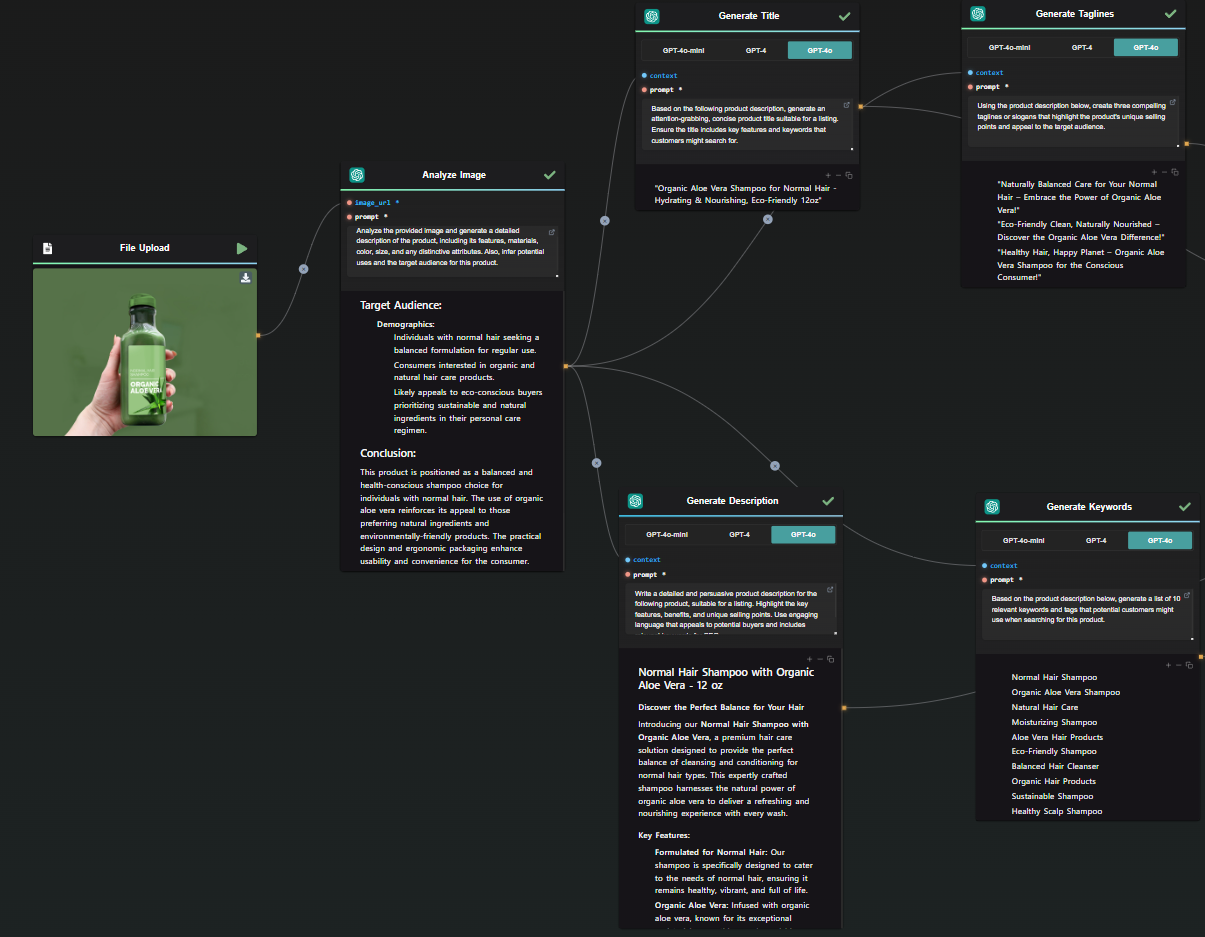

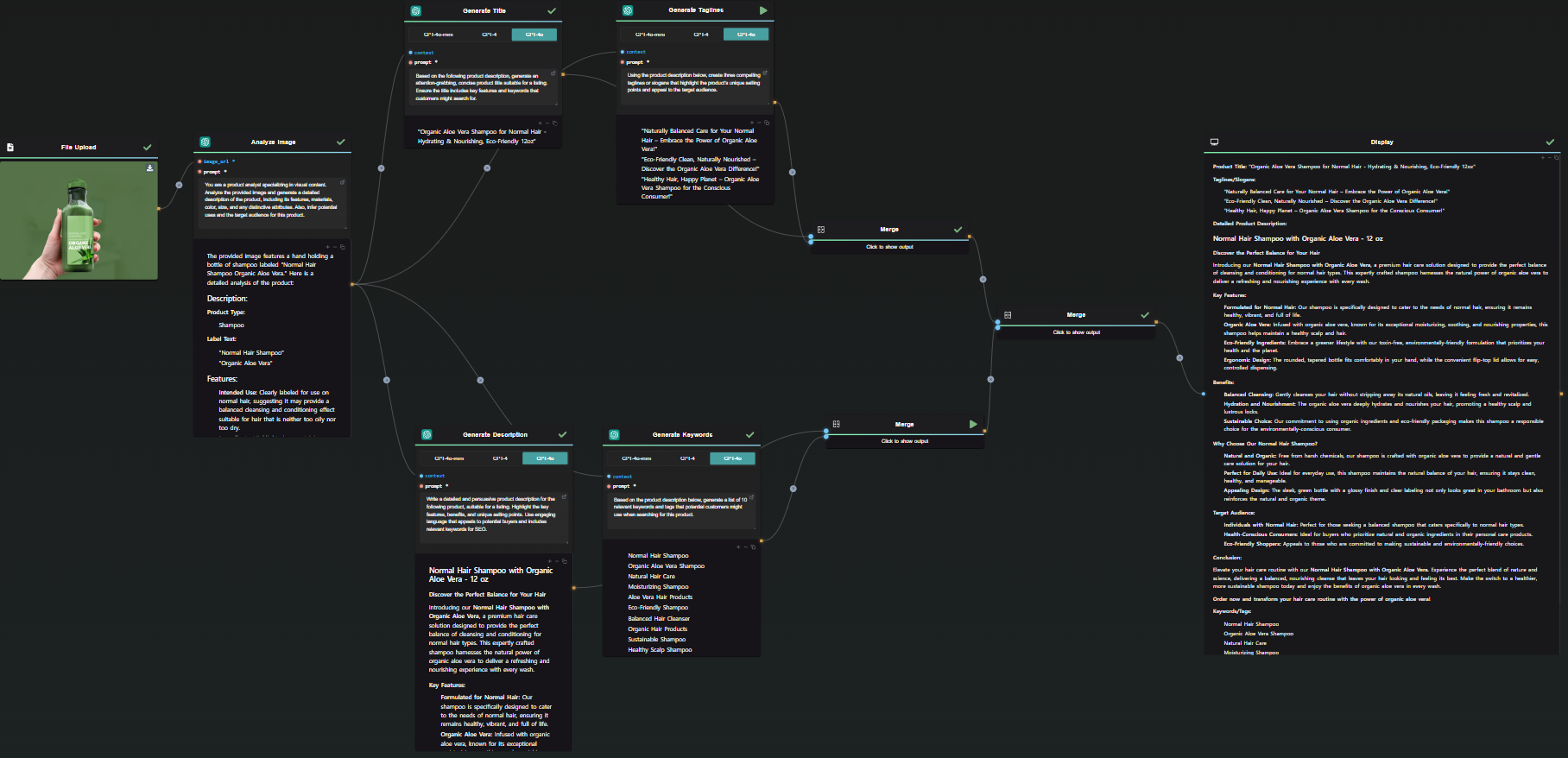

The "Generate Product Description" template within AI-Flow is specifically designed to assist users in creating high-quality, SEO-friendly product descriptions. This powerful tool harnesses the capabilities of multiple AI models to produce descriptions that are not only informative but also engaging and tailored to your target audience.

Imagine you have a new product—a hydrating, nourishing shampoo infused with organic aloe vera. Crafting the perfect product description is your next step. Rather than struggling with writer's block or settling for generic, uninspired content, you can leverage the AI-Flow template to generate a compelling description that resonates with potential customers.

How to Use AI-Flow for SEO-Optimized Product Descriptions

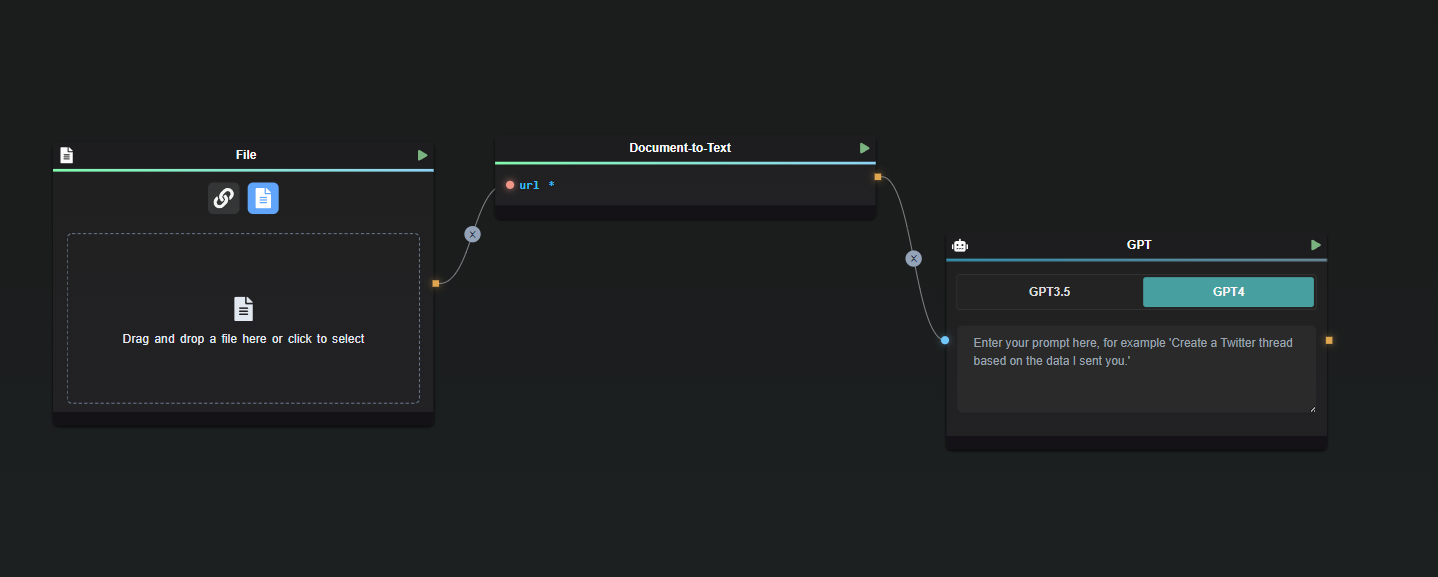

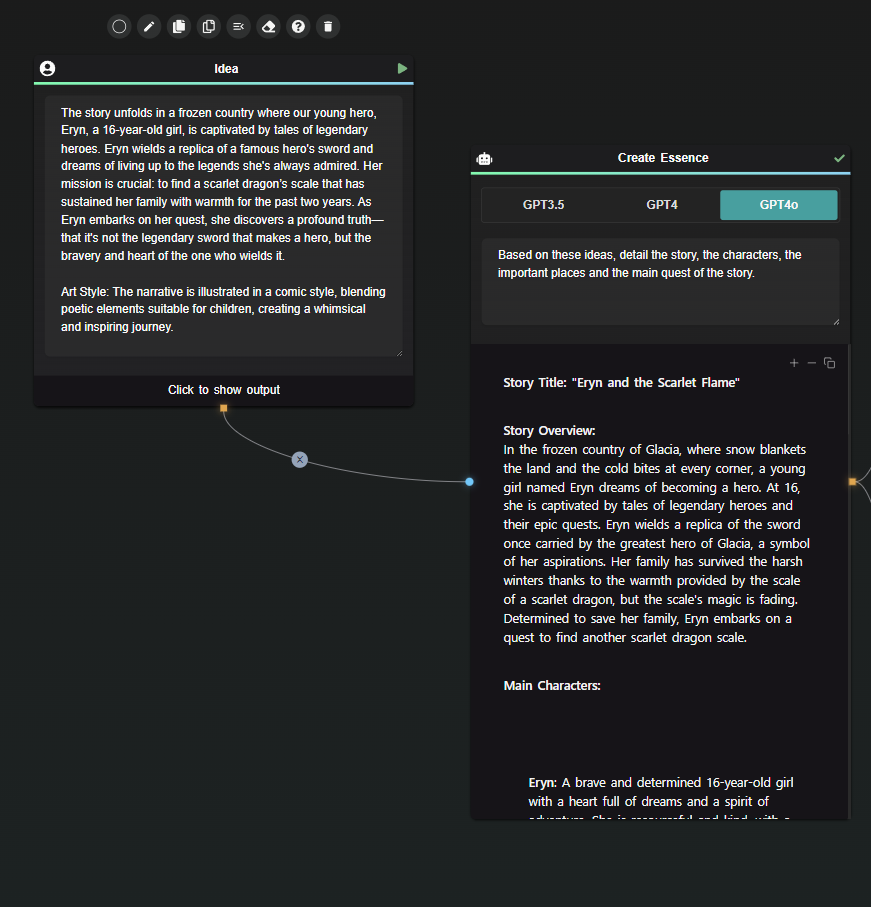

Step 1: Upload Your Product Image

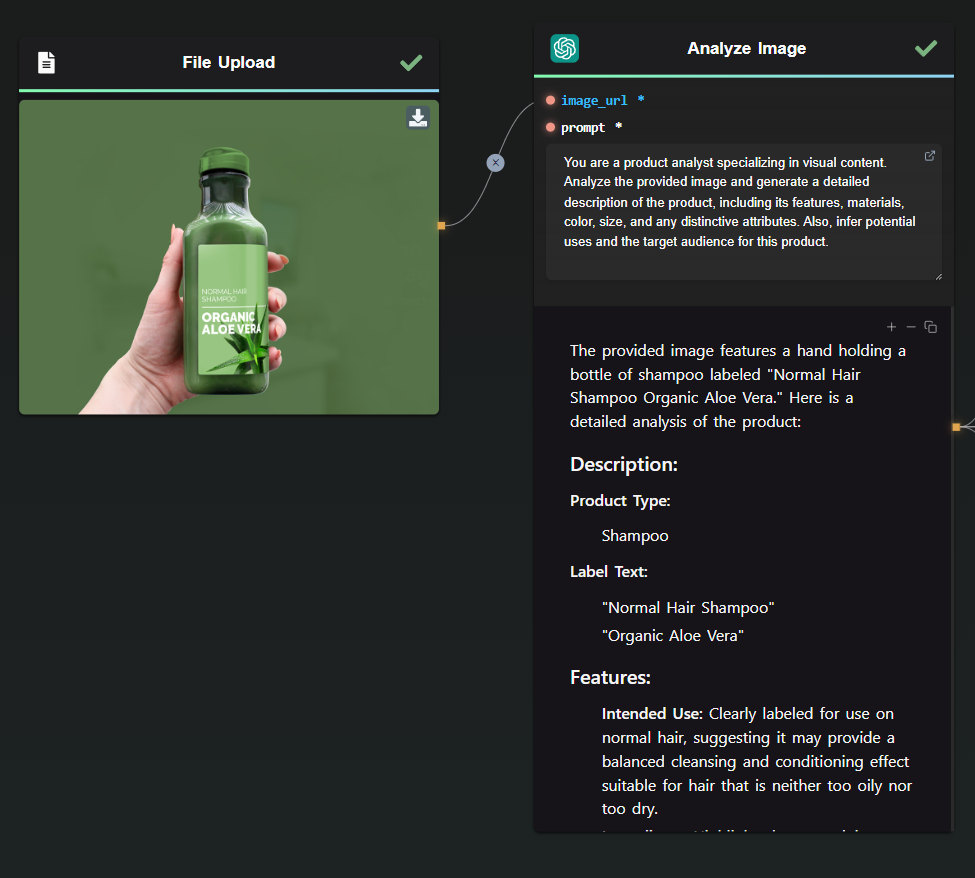

Start by uploading a clear image of your product. For our example, you would upload a photo of the shampoo bottle.

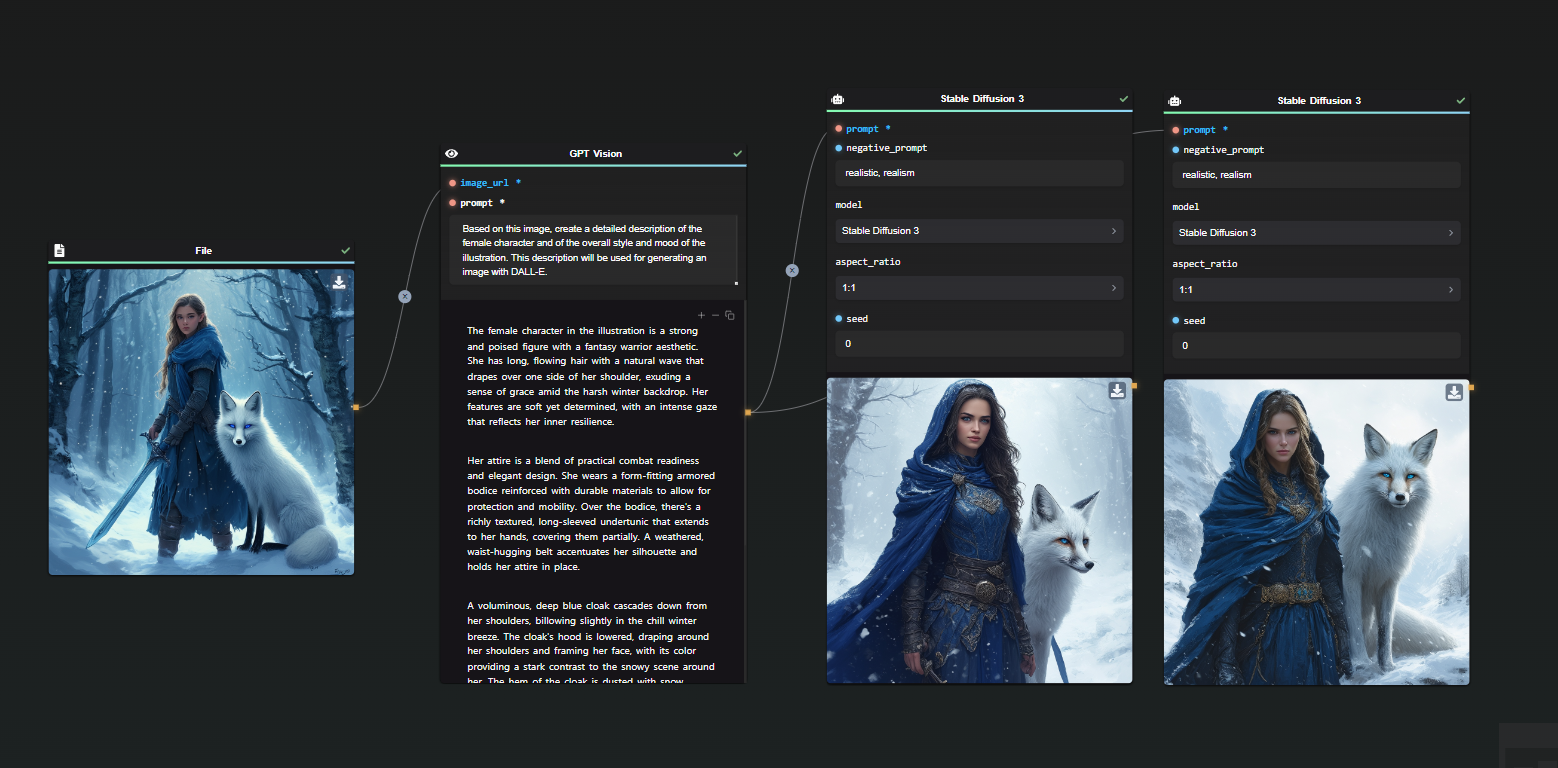

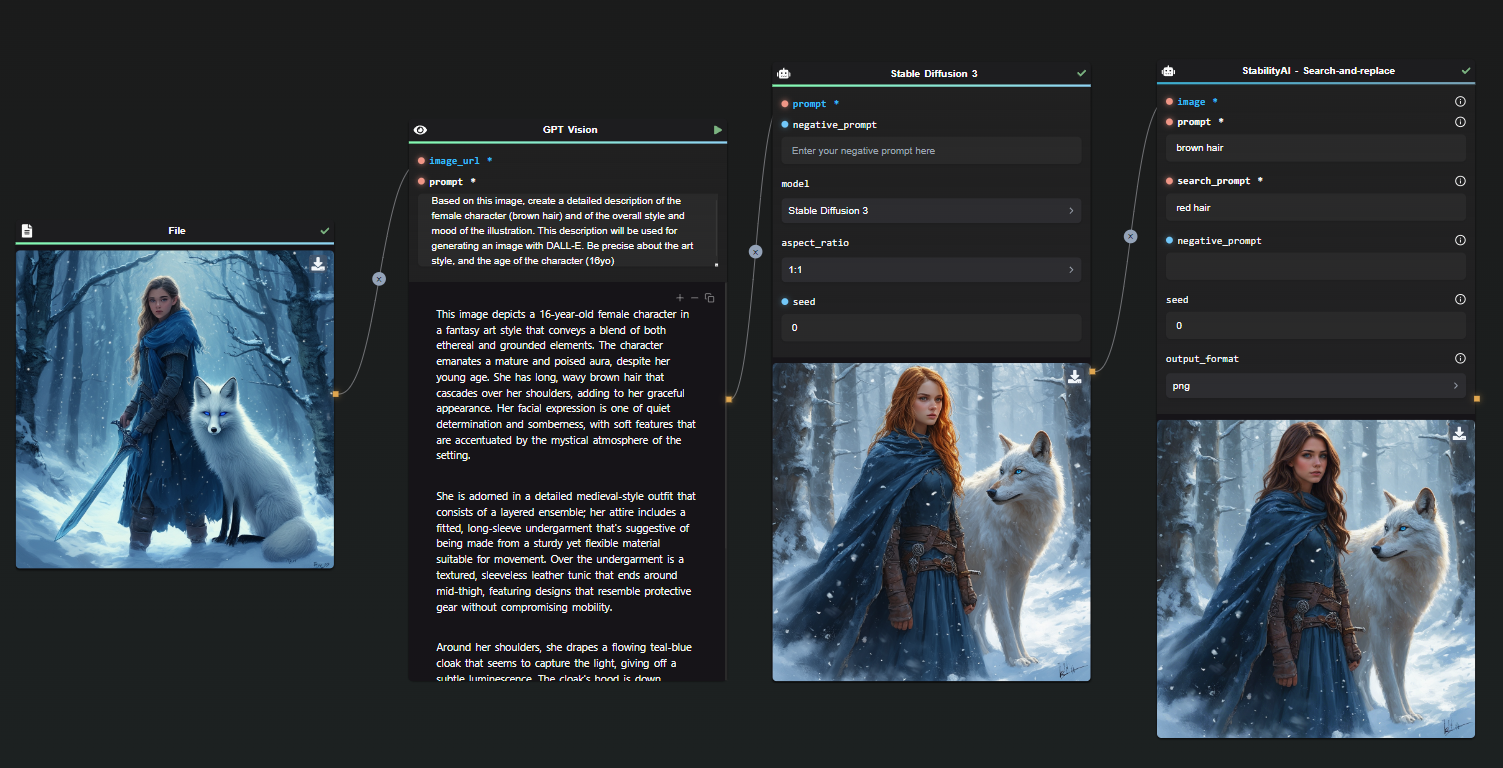

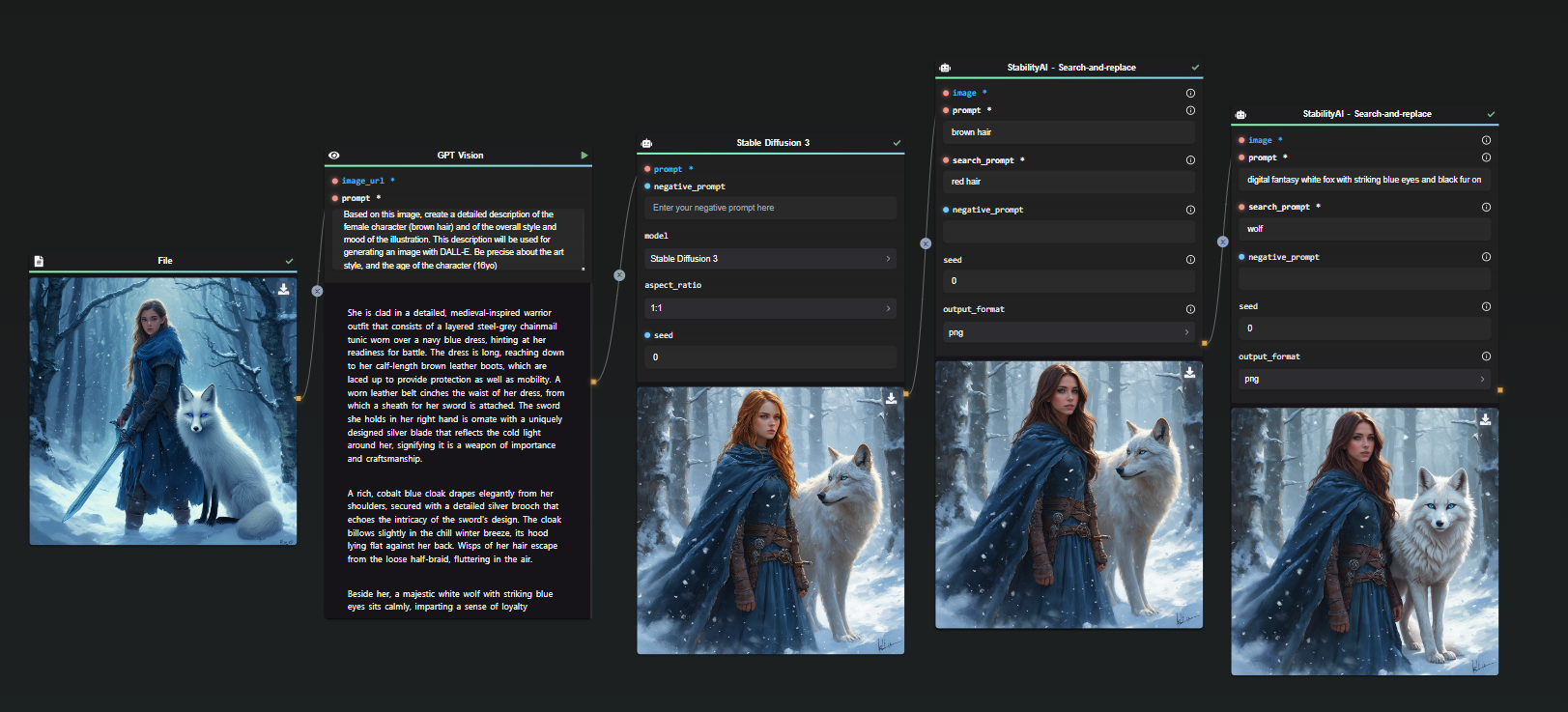

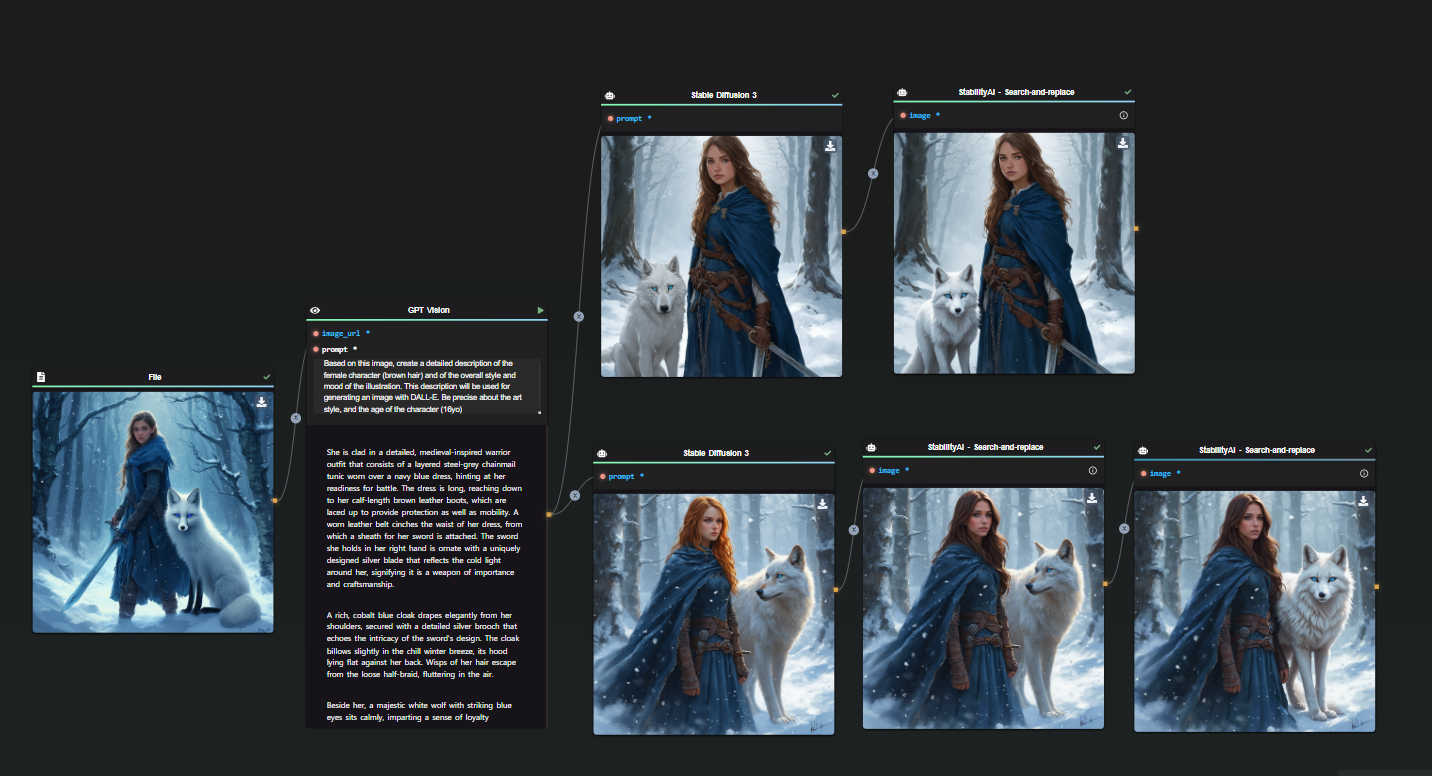

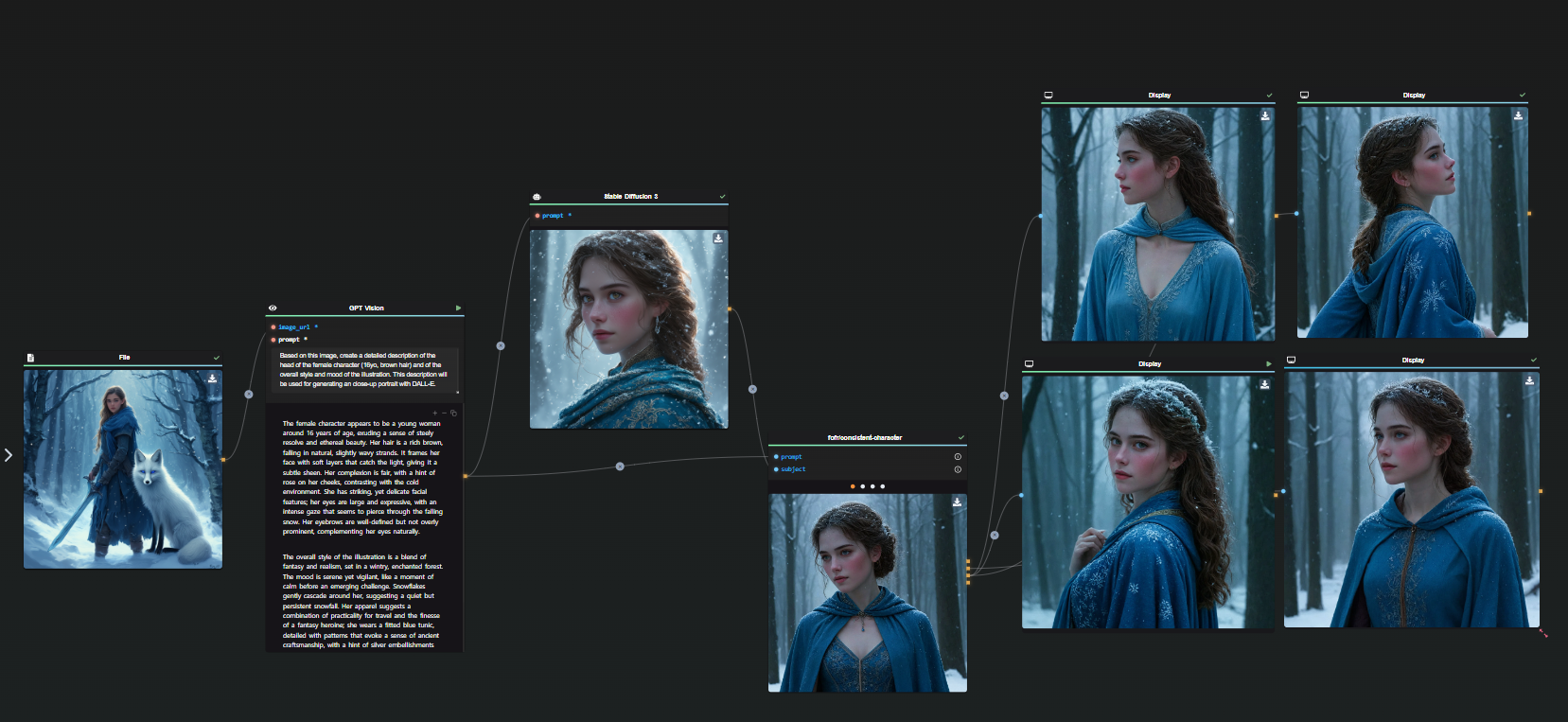

Step 2: Analyze the Image

The GPT-Vision node's image recognition capabilities come into play here. The node analyzes the uploaded image, identifying the product type and the key features visible on the label. This step lays the foundation for generating a description that accurately represents your product.
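For readers curious about what a vision node does behind the scenes, here is a minimal sketch of how a product photo could be packaged for a vision-capable chat model. It only builds the request body and sends nothing. The function name, the model name, and the instruction text are illustrative assumptions, not AI-Flow's actual internals.

```python
import base64


def build_vision_request(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a chat-style request body asking a vision model to describe a product photo.

    Hypothetical helper: AI-Flow's GPT-Vision node may structure its request differently.
    """
    # Vision-capable chat models commonly accept images as base64 data URLs.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",  # assumption: placeholder name for any vision-capable model
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "Identify the product type and list the key "
                                "features visible on the label.",
                    },
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }
```

In the template this happens inside the node, so you never write the request yourself; the sketch is only meant to show what "analyzing the image" amounts to.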

Step 3 (Optional): Provide Contextual Information

The template works with just one image, but you can customize the prompts or add other steps to input additional details about the product, such as its intended use, unique ingredients, and key benefits. For our shampoo example, you could include information about its organic ingredients, its suitability for normal hair, and its hydrating properties.
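To make the idea of extra context concrete, the sketch below shows one way the image analysis and your optional details could be combined into a single prompt. The function name, the field labels, and the prompt wording are assumptions for illustration; the prompts inside the template may look different.

```python
from typing import Dict, Optional


def build_description_prompt(image_summary: str,
                             context: Optional[Dict[str, str]] = None) -> str:
    """Combine the image analysis with optional product details into one prompt.

    Hypothetical helper: the wording and field labels are illustrative only.
    """
    lines = [
        "Write an SEO-friendly product description.",
        f"Image analysis: {image_summary}",
    ]
    # Extra details are optional; with no context the prompt relies on the image alone.
    for label, value in (context or {}).items():
        lines.append(f"{label}: {value}")
    return "\n".join(lines)


# Usage with the shampoo example from this guide.
prompt = build_description_prompt(
    "Shampoo bottle, label mentions organic aloe vera",
    {"Hair type": "normal hair", "Key benefit": "hydrating"},
)
```

Keeping the context as labeled fields makes it easy to add or drop a detail without rewriting the whole prompt.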

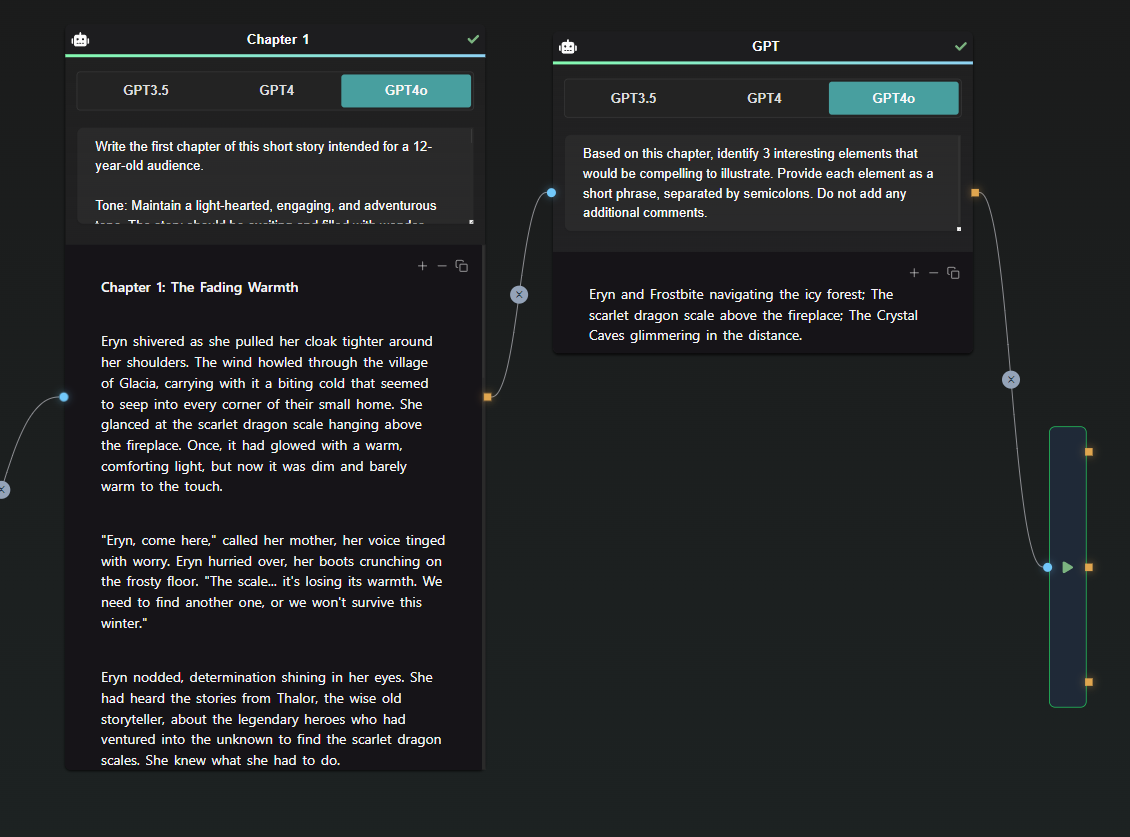

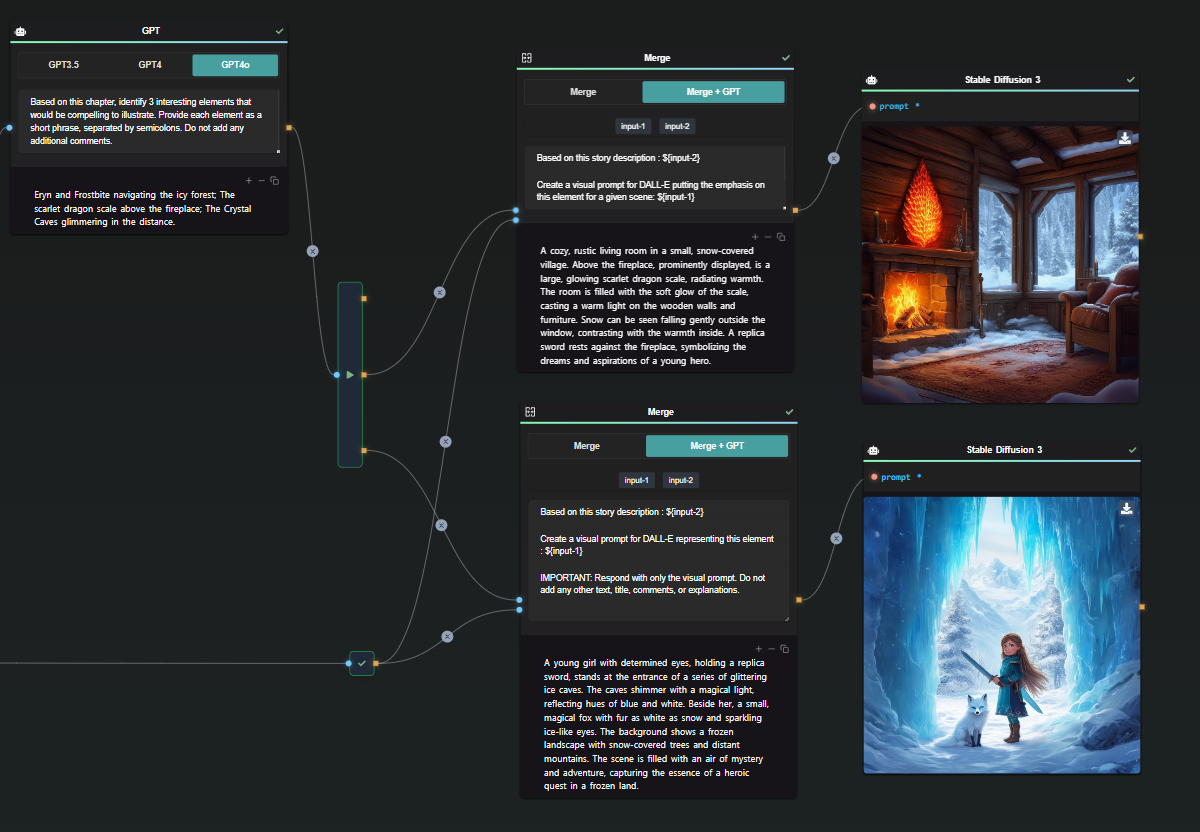

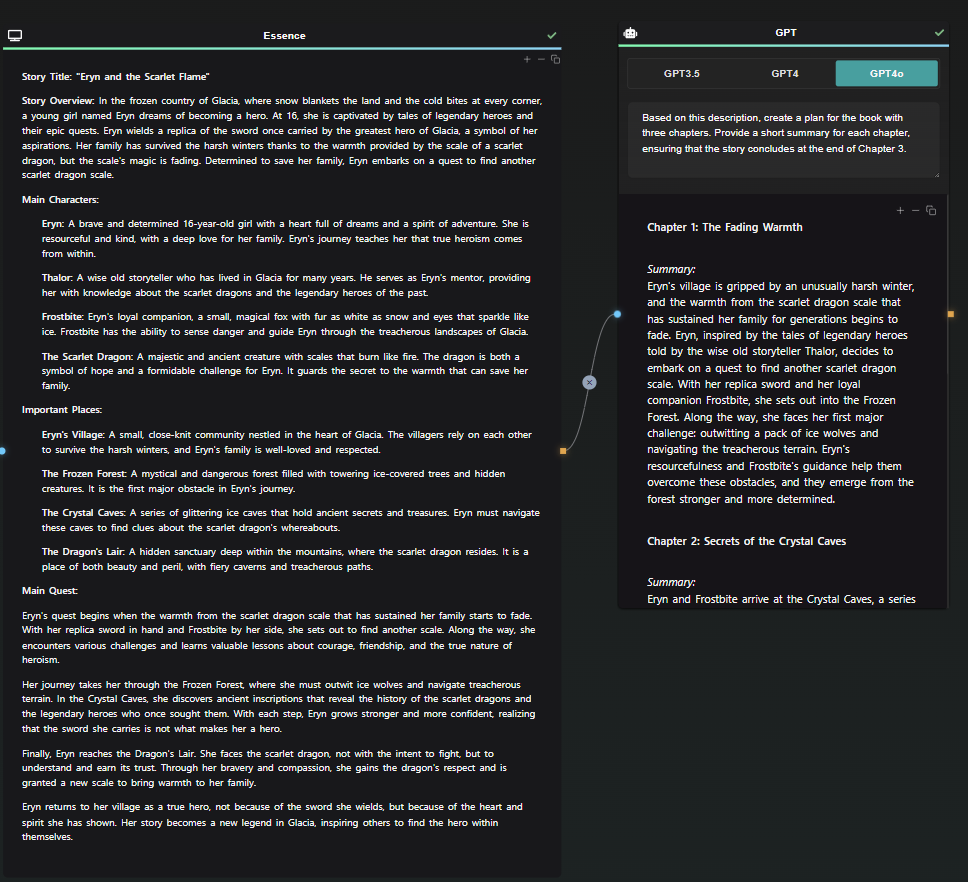

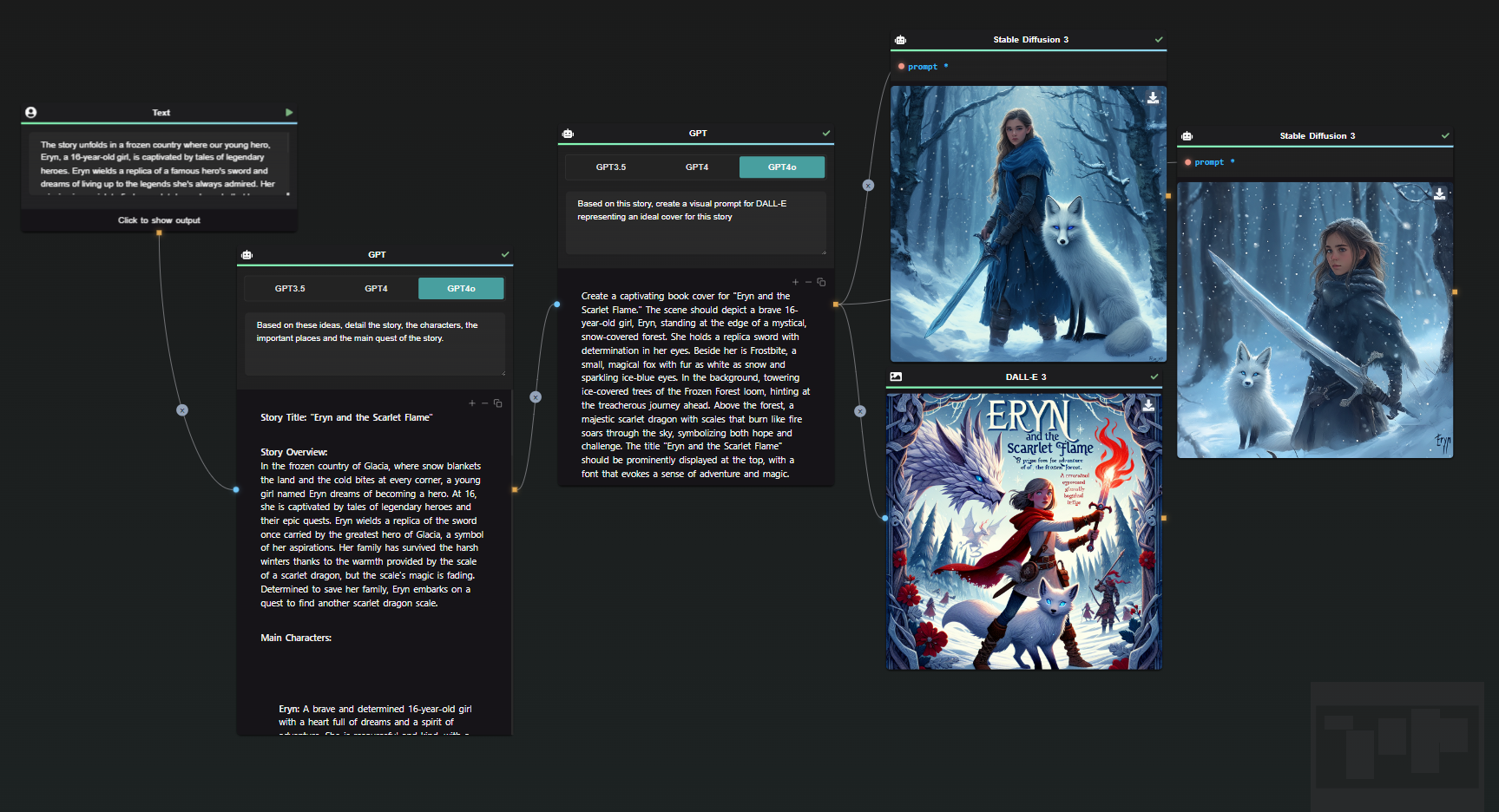

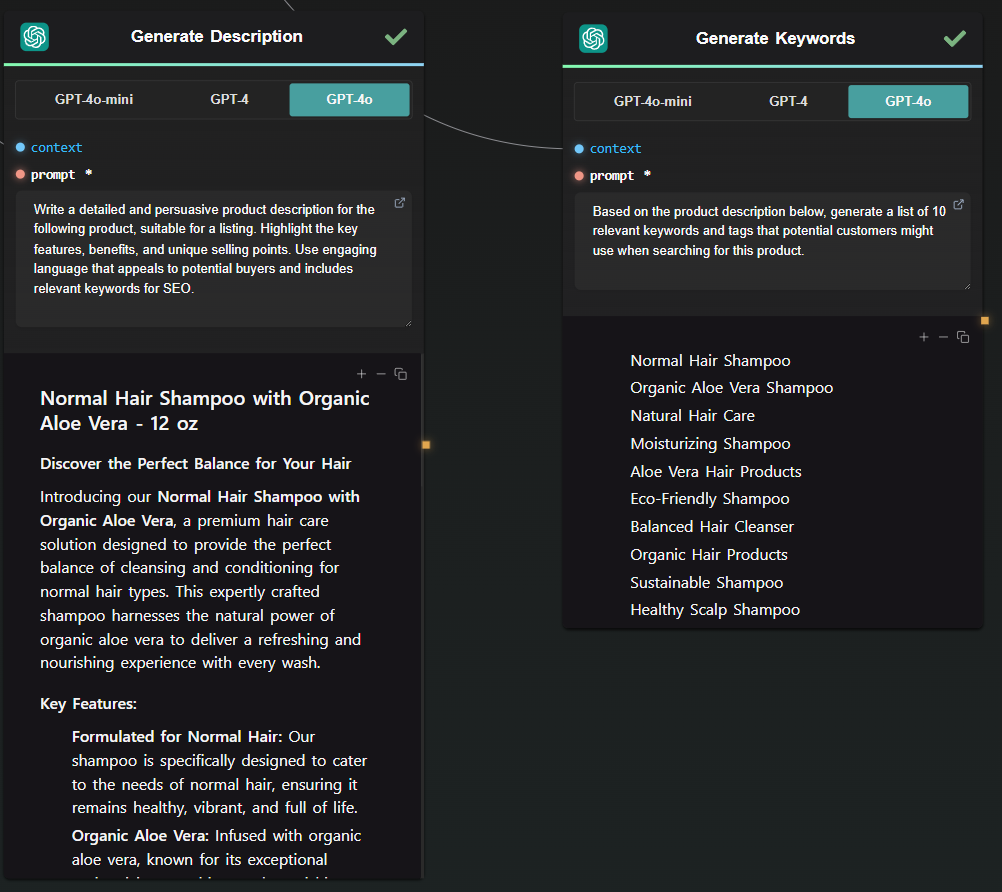

Step 4: Generate Titles, Taglines, and Descriptions

With a few clicks, AI-Flow produces a set of SEO-optimized titles, taglines, and full product descriptions. The generated content highlights the unique selling points of your product and is written with search engines in mind, which can help your listings rank higher on search results pages.
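If you plan to move the generated content into a store listing, it can help to treat it as structured data. The sketch below assumes you have prompted the model to return JSON with `titles`, `taglines`, and `description` fields; that output shape and every name in the code are assumptions for illustration, not something the template guarantees.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class ProductCopy:
    """Hypothetical container for one round of generated content."""
    titles: List[str]
    taglines: List[str]
    description: str


def parse_product_copy(raw_json: str) -> ProductCopy:
    """Parse model output, assuming it was asked to return JSON with these three keys."""
    data = json.loads(raw_json)
    missing = [key for key in ("titles", "taglines", "description") if key not in data]
    if missing:
        # Fail loudly so an incomplete generation is not published by accident.
        raise ValueError(f"missing fields: {', '.join(missing)}")
    return ProductCopy(
        titles=[str(title).strip() for title in data["titles"]],
        taglines=[str(tagline).strip() for tagline in data["taglines"]],
        description=str(data["description"]).strip(),
    )
```

Checking for missing fields up front means a partial response is caught before it reaches your product page.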

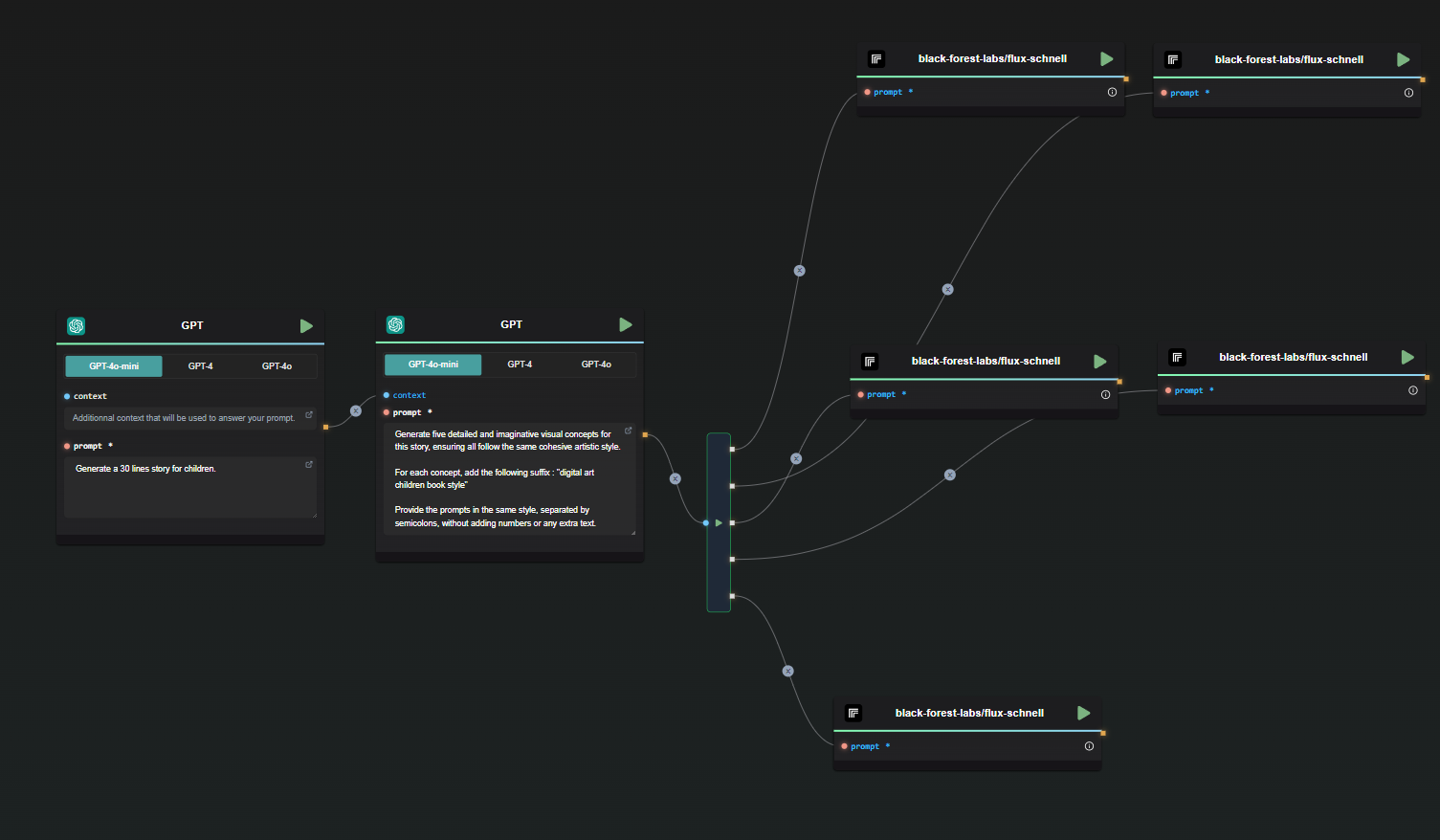

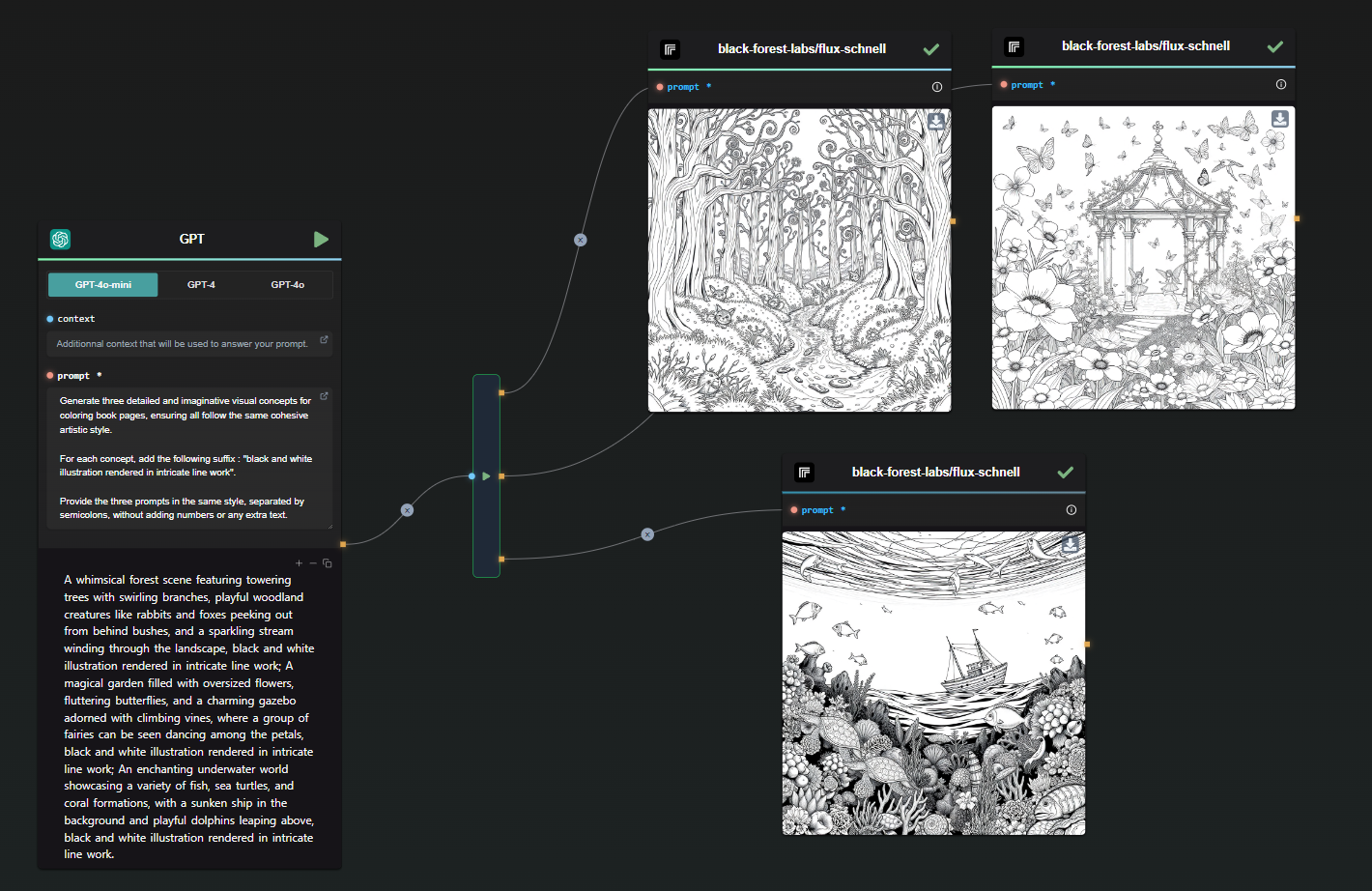

Adding Value Through Customization

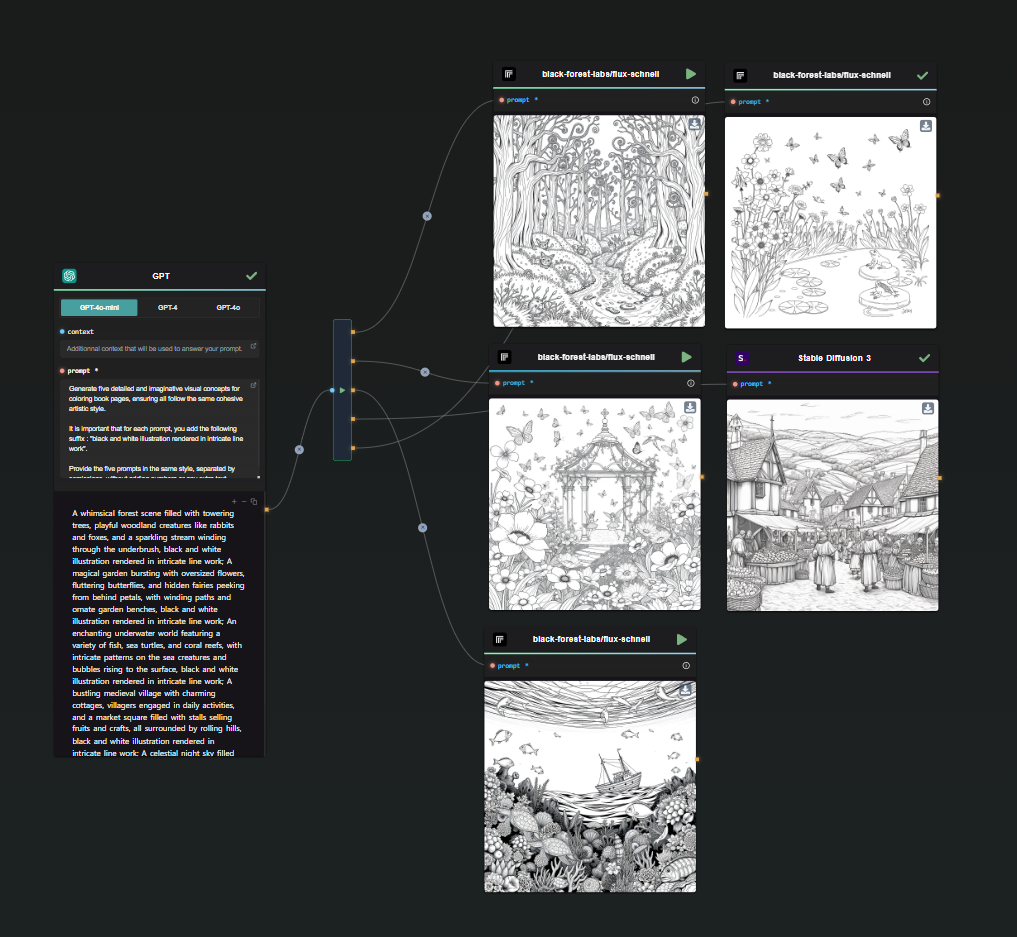

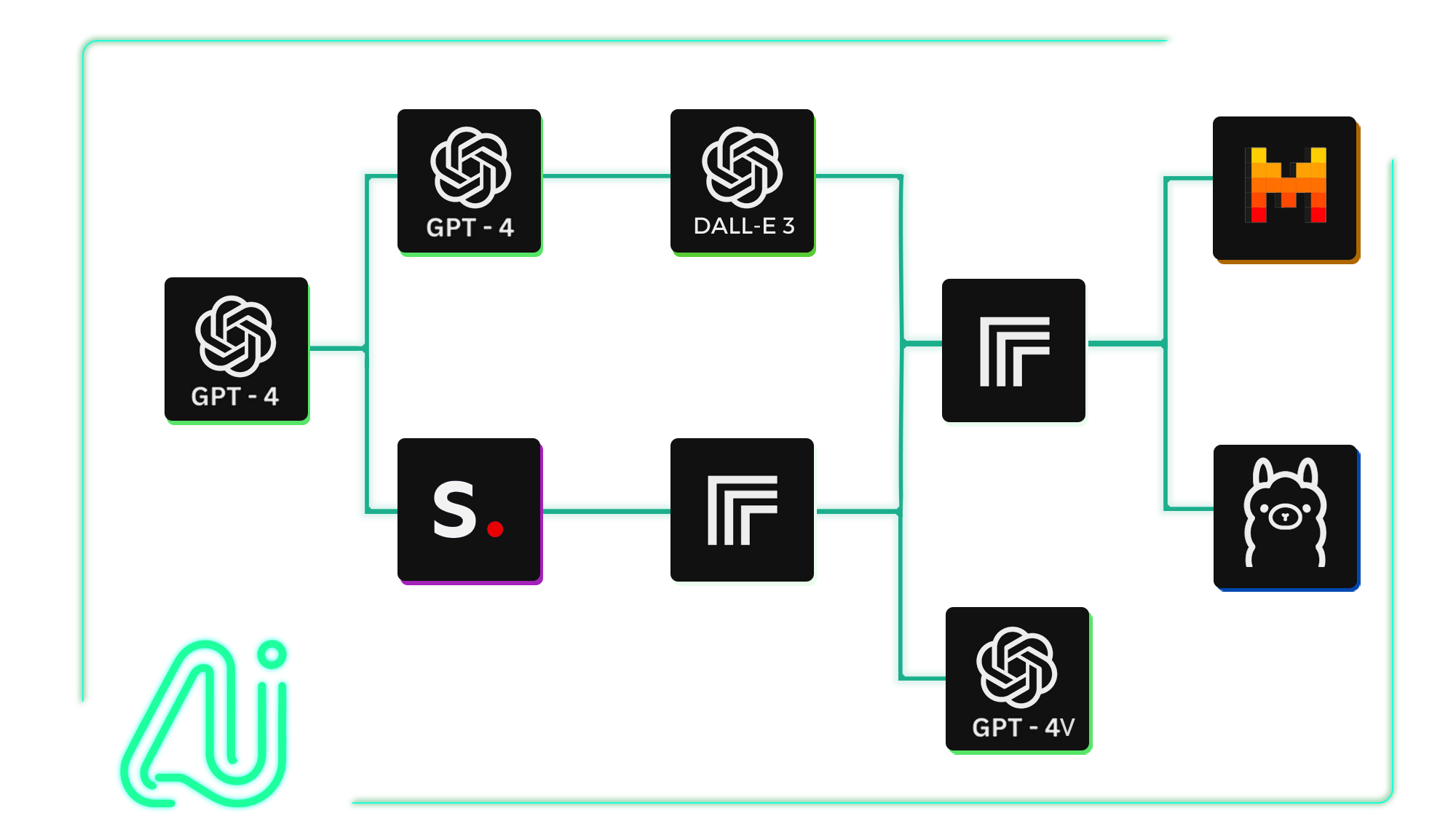

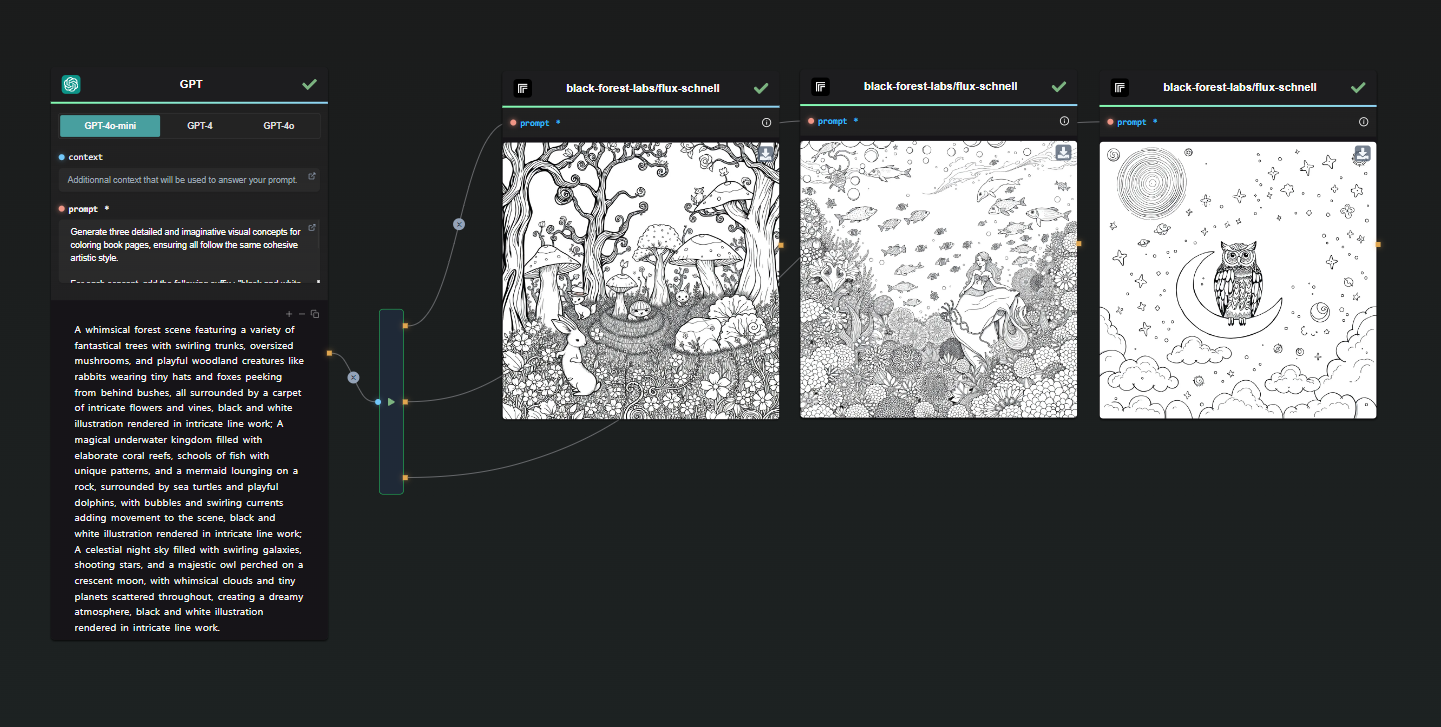

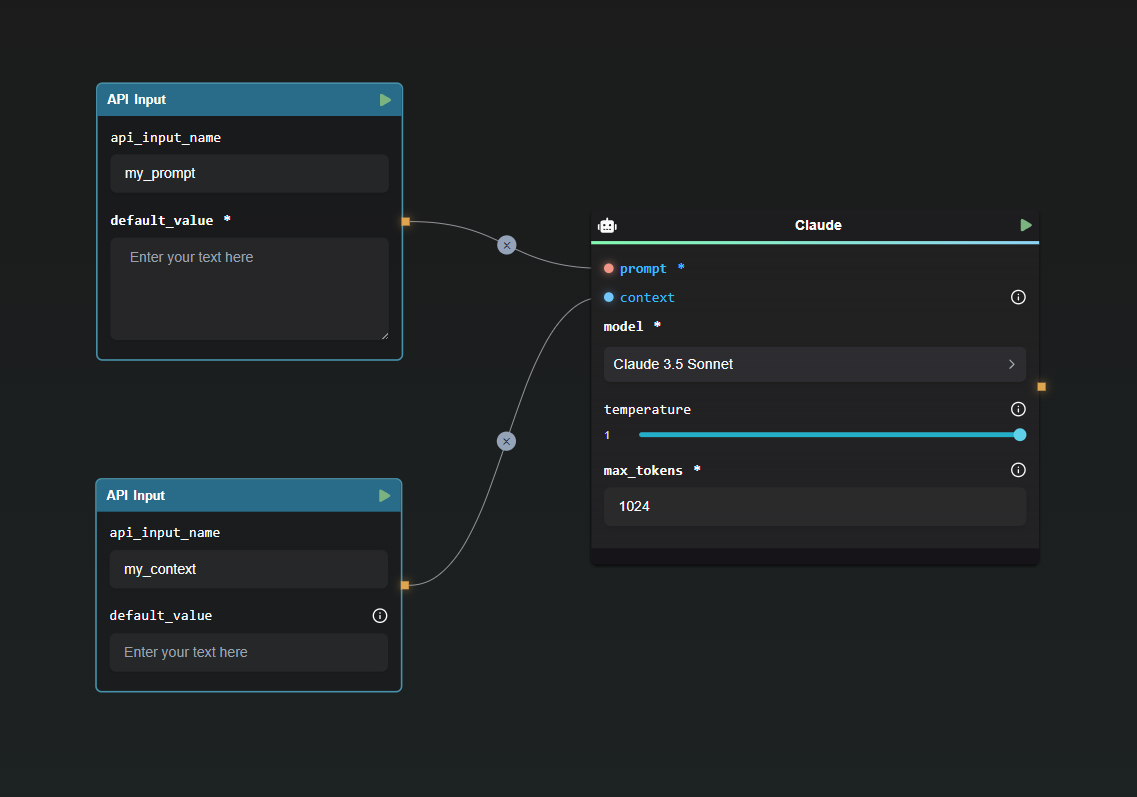

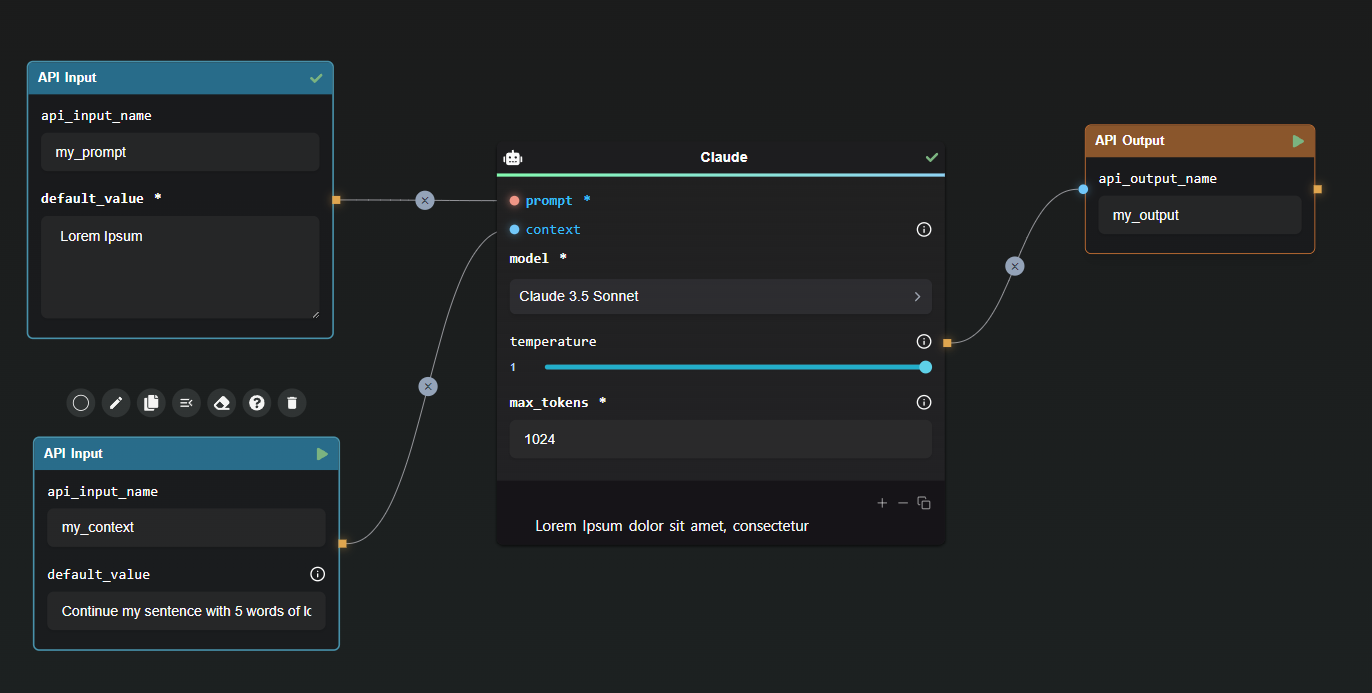

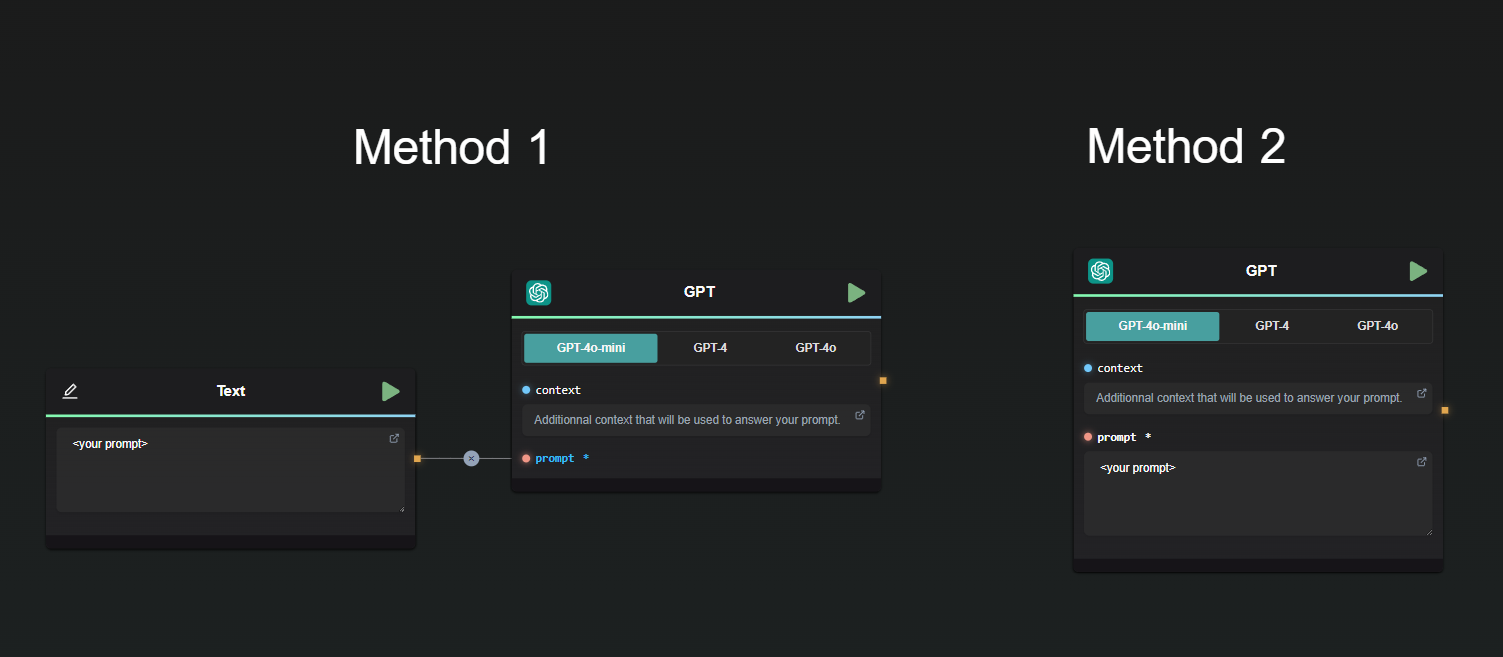

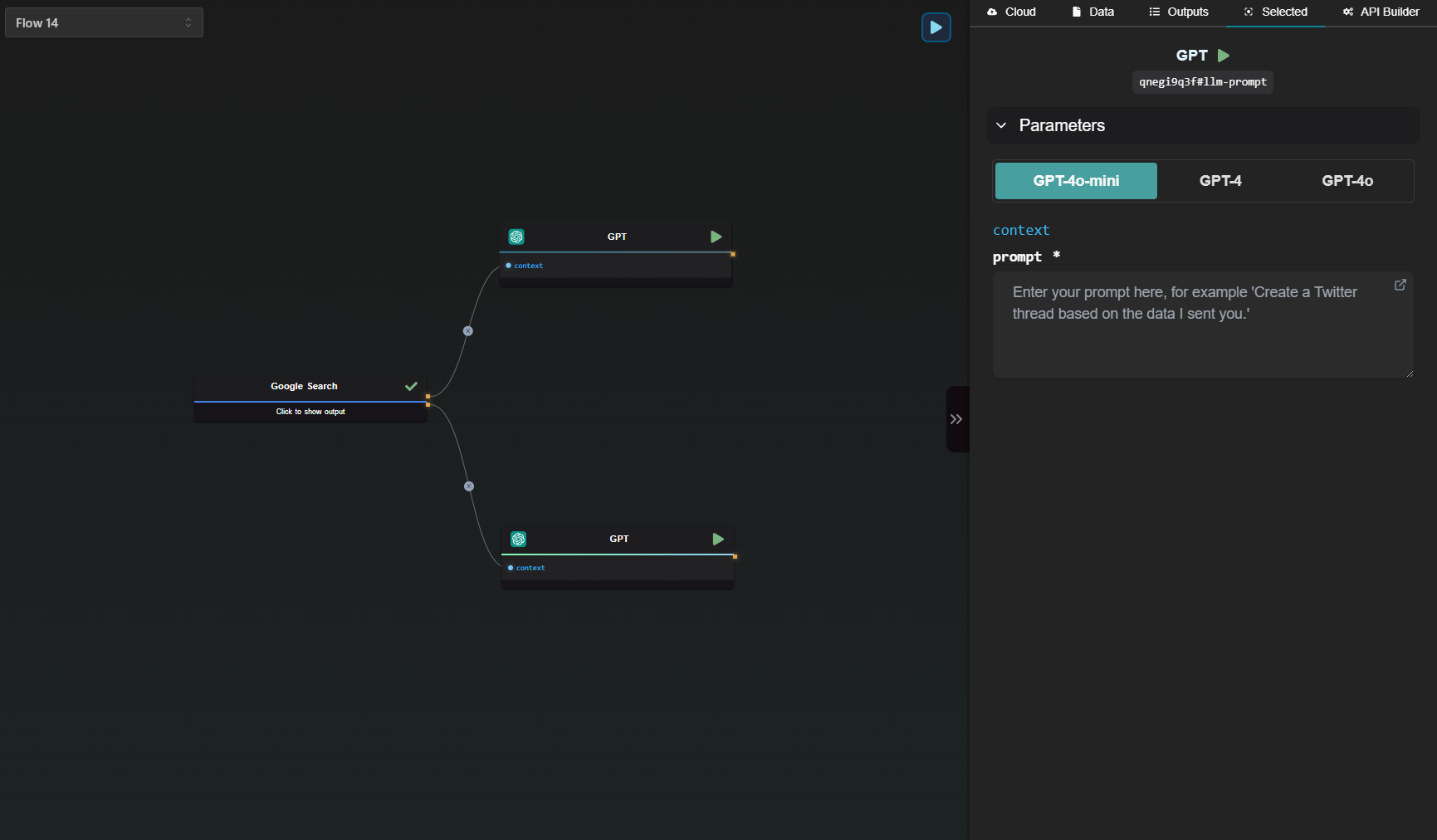

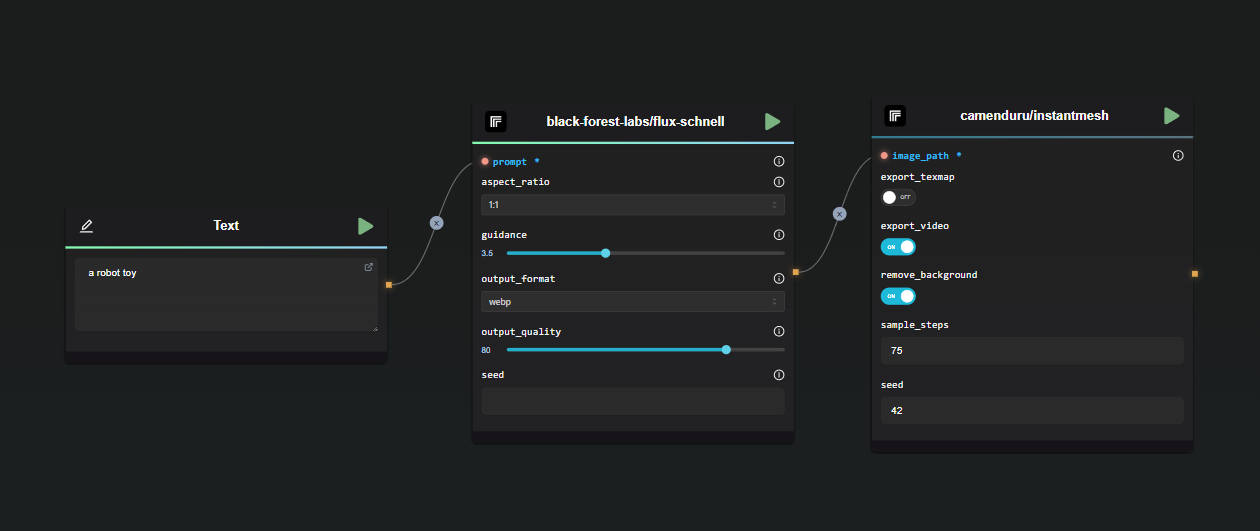

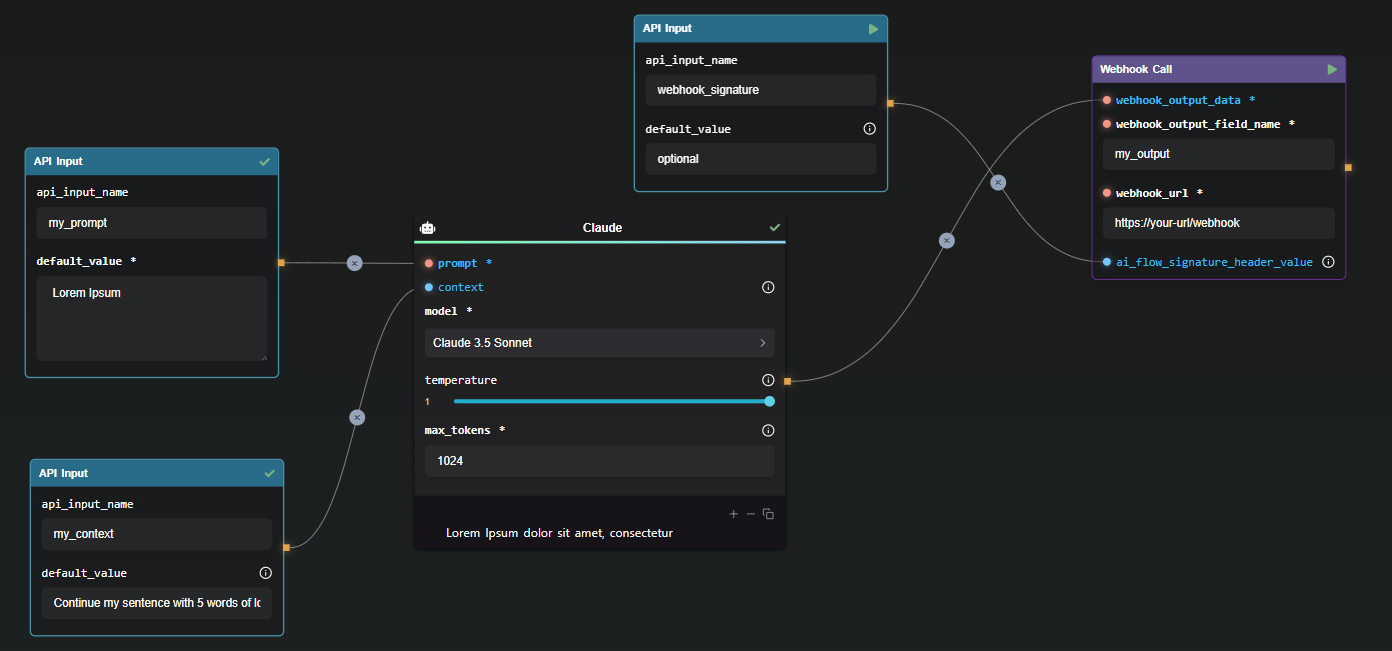

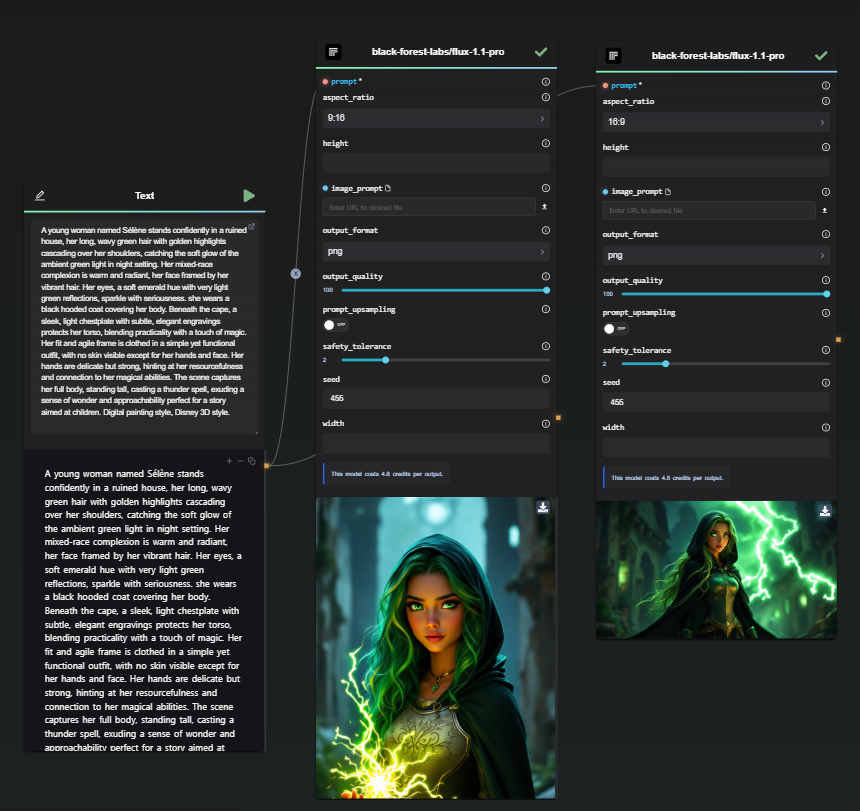

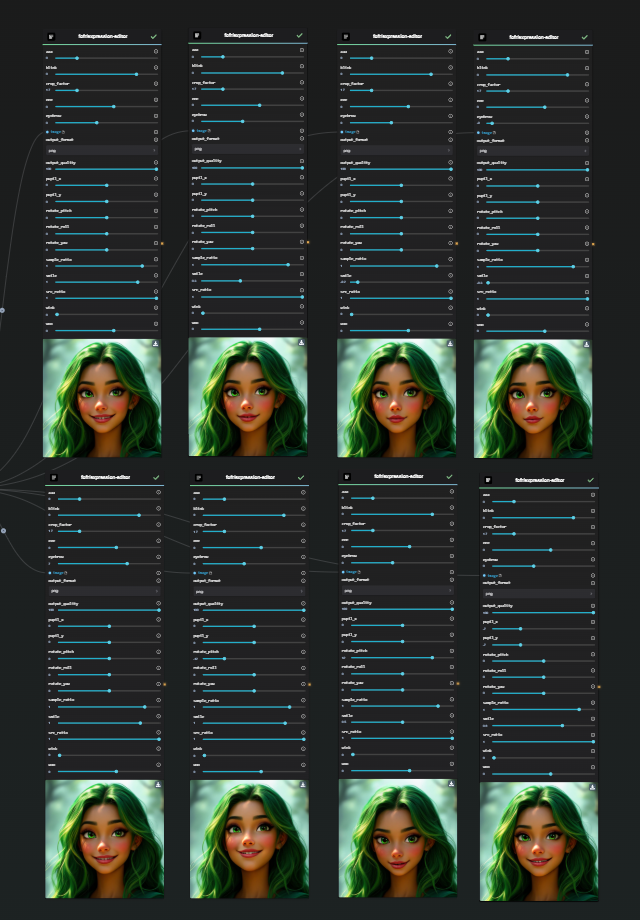

One of the standout features of the AI-Flow platform is its user-friendly drag-and-drop interface. Users can customize their workflows by adding nodes representing different AI models, each contributing unique features and enhancements. For instance, while GPT-4 might handle the text generation, you can add another node to refine the tone and style to best suit your brand.

Moreover, users can experiment with different AI models to tailor the output perfectly to their needs. This flexibility enables the production of descriptions that align with your brand voice, providing a consistent and professional touch to your product listings.
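Conceptually, a workflow of connected nodes behaves like a chain of functions, each taking the previous result and adding to it. The sketch below models that idea with stub functions standing in for AI models; the node names, the shared-dictionary design, and the stub outputs are all assumptions for illustration, not AI-Flow's real implementation.

```python
from typing import Callable, Dict, List

# A node takes the workflow state and returns an updated copy of it.
Node = Callable[[Dict[str, str]], Dict[str, str]]


def analyze_image(state: Dict[str, str]) -> Dict[str, str]:
    # Stub for a vision node: a real one would call an image model.
    return {**state, "features": "hydrating shampoo with organic aloe vera"}


def generate_copy(state: Dict[str, str]) -> Dict[str, str]:
    # Stub for a text-generation node.
    return {**state, "description": f"Meet our {state['features']}."}


def refine_tone(state: Dict[str, str]) -> Dict[str, str]:
    # Stub for an extra node that adjusts tone and style to the brand voice.
    return {**state, "description": state["description"] + " Made for everyday care."}


def run_workflow(nodes: List[Node], state: Dict[str, str]) -> Dict[str, str]:
    """Run the nodes in order, passing each node's output to the next."""
    for node in nodes:
        state = node(state)
    return state


result = run_workflow([analyze_image, generate_copy, refine_tone],
                      {"image": "shampoo.png"})
```

Swapping a model or adding a refinement step then amounts to replacing or appending one entry in the list, which is the same flexibility the drag-and-drop editor gives you visually.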

The Benefits of a Structured AI Workflow

Many entrepreneurs already use tools like ChatGPT for creating content. This aligns with the broader trend: McKinsey's 2024 AI Report indicates that 65% of organizations now regularly use generative AI in at least one business function, nearly double the share reported in its previous survey ten months earlier. Notably, marketing and sales saw the biggest increase, with reported adoption more than doubling, which underlines the growing role AI plays in eCommerce.

The structured workflow provided by AI-Flow offers numerous advantages:

- Consistency: Seamlessly integrate multiple AI models to produce cohesive and polished content.

- Efficiency: Automate repetitive tasks, freeing up more time for creative and strategic activities.

- Customization: Easily adapt and refine your workflows to match evolving business needs and customer expectations.

Your Path to Engaging Product Descriptions

By using the "Generate Product Description" template in AI-Flow, you can transform your content creation process. Whether you're launching a new product or updating existing descriptions, this template offers a streamlined, efficient, and highly effective solution.

Why struggle with mundane writing tasks when you can focus on what you do best—innovating and growing your business? Leverage the power of AI-Flow to produce captivating descriptions that turn browsers into buyers and elevate your online presence.

Get Started Now

Explore the capabilities of AI-Flow today and see how the "Generate Product Description" template can bring your products to life. Visit AI-Flow to start your journey for free.

Additional Resources

For more detailed information, refer to the following resources: